Segmented A/B tests: Avoiding average experiences

One of the biggest mistakes companies make when A/B testing is selecting an average audience. Instead, they should make segmentation an essential part of their test design. Here’s how.

Here’s what you need to know:

- A/B testing is most effective when you target specific audience segments based on demographics, behavior, and interests. This allows you to deliver more relevant experiences and gather more actionable insights.

- Begin by targeting high-traffic segments to gather initial data, then use those findings to refine your testing strategy and target smaller, more specific groups.

- Consider factors like purchase history, recency of visit, location, and even past A/B testing results to create highly targeted segments that yield meaningful data.

- Focus on the “why” behind your tests. Clearly define what you hope to learn from each A/B test. What customer behaviors or preferences are you trying to understand? What specific actions do you want visitors to take?

- Don’t just collect data, gain insights. Use the results of your A/B tests to inform strategic decisions about your website, product offerings, and overall business model. A/B testing should be used to drive continuous improvement, not just generate random data.

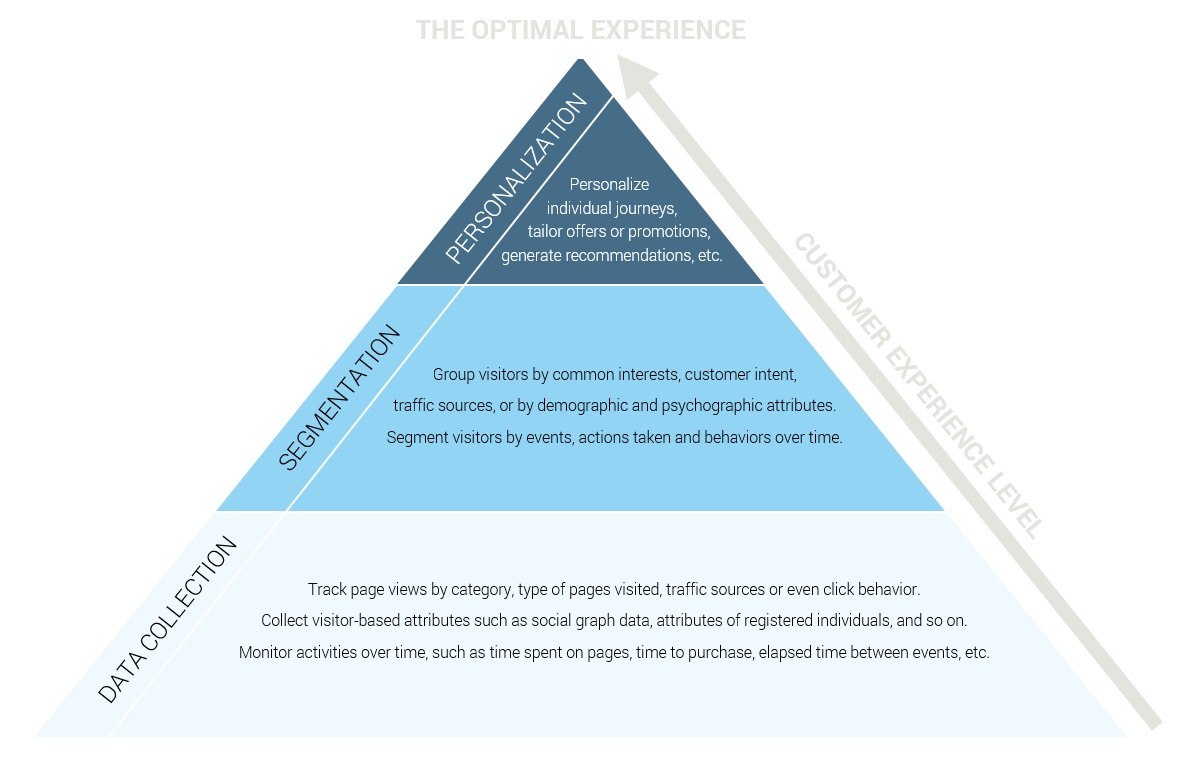

One of the biggest mistakes companies make when they begin A/B testing in earnest is sticking to a single, simple audience. The problem is that if you want relevant, insightful results, you need a segmentation and personalization strategy in place.

Targeting the “average” person, usually in the form of “all visitors,” is an easy trap to fall into. The idea is that by casting a wide net, you can catch everyone. In reality, there is no such thing as an “average” visitor. Sure, your website’s visitors form a bell curve, but that curve is actually made up of subgroups, each with its own bell curve centered in a different place.
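To make the bell-curve point concrete, here is a minimal sketch that models traffic as two hypothetical subgroups, each with its own normal distribution of some per-visitor metric (order value, say). The subgroup names, shares, means, and spreads are all invented for illustration. It computes the overall average and then asks what fraction of visitors actually sit near that average.

```python
from statistics import NormalDist

# Hypothetical subgroups: (share of traffic, distribution of a per-visitor metric
# such as order value in dollars). The numbers are illustrative, not real data.
subgroups = {
    "bargain_hunters": (0.5, NormalDist(mu=40, sigma=10)),
    "premium_buyers": (0.5, NormalDist(mu=160, sigma=20)),
}


def overall_mean(groups: dict) -> float:
    """Mean of the combined population: the share-weighted mean of the subgroups."""
    return sum(share * dist.mean for share, dist in groups.values())


def share_within(groups: dict, center: float, width: float) -> float:
    """Fraction of all visitors whose value lies within `width` of `center`."""
    return sum(
        share * (dist.cdf(center + width) - dist.cdf(center - width))
        for share, dist in groups.values()
    )


avg = overall_mean(subgroups)
print(f"'Average' visitor value: ${avg:.0f}")
print(f"Visitors within $15 of that average: {share_within(subgroups, avg, 15):.1%}")
print(f"Visitors within $15 of the bargain-hunter mean: {share_within(subgroups, 40, 15):.1%}")
```

With these made-up numbers the combined average is $100, yet well under 1% of visitors fall within $15 of it, while over 40% of all visitors cluster around the bargain-hunter mean alone. An experience tuned for the “average” visitor is tuned for almost nobody.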

A good place to start is to ask you this:

Are YOU average?

Chances are that in some ways you’re average, but in some very important and distinct ways, you’re also not.

In terms of your website visitors, some common examples of subgroups are:

- New vs. returning visitors

- Men vs. women

- Mobile vs. desktop visitors

- Loyal customers vs. one-time shoppers

Each of these groups will have distinct affinities, and by targeting those groups specifically while running experiments, you can increase the likelihood that you’ll find that group’s drivers for higher conversion rates or increased average order value.

To illustrate why testing against a single unified audience is a problem, let’s say that you have an eCommerce store that sells outdoor clothing, and 80% of your visitors are men. If you test two hero images at the top of the page, one focusing on men’s clothes and the other on women’s, the male-focused one will almost certainly win. Running the test didn’t tell you anything you didn’t already know.

Worse, that same result could be masking a strong response to the women’s hero image among female visitors. The quickest path to a higher conversion rate may be to identify female visitors and target them with relevant content, but that’s not the conclusion you’re likely to reach from an “all visitors” test. This is just one example of why segmentation is essential to good test design.
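The hero-image scenario can be sketched in a few lines. The counts below are hypothetical, chosen only to match the 80% men / 20% women split in the example; they are not real results. The sketch compares the pooled “all visitors” conversion rate for each variant with the per-segment rates, then projects what would happen if each segment saw its own winner.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    visitors: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.visitors


# Hypothetical counts for illustration only: 10,000 visitors per variant, 80% men.
results = {
    "A_mens_hero": {"men": Cell(8000, 400), "women": Cell(2000, 40)},
    "B_womens_hero": {"men": Cell(8000, 240), "women": Cell(2000, 120)},
}


def pooled_rate(cells: dict) -> float:
    """Conversion rate for 'all visitors', ignoring segments."""
    visitors = sum(c.visitors for c in cells.values())
    return sum(c.conversions for c in cells.values()) / visitors


def best_per_segment(results: dict) -> dict:
    """Name of the winning variant within each segment."""
    segments = next(iter(results.values())).keys()
    return {s: max(results, key=lambda v: results[v][s].rate) for s in segments}


def targeted_rate(results: dict) -> float:
    """Projected rate if each segment saw its own winner (assumes the segment mix is unchanged)."""
    winners = best_per_segment(results)
    conversions = sum(results[v][s].conversions for s, v in winners.items())
    visitors = sum(results[v][s].visitors for s, v in winners.items())
    return conversions / visitors


for variant, cells in results.items():
    by_segment = {s: f"{c.rate:.1%}" for s, c in cells.items()}
    print(variant, f"pooled {pooled_rate(cells):.1%}", by_segment)
print("winners by segment:", best_per_segment(results))
print(f"targeted: {targeted_rate(results):.1%}")
```

Here the pooled numbers favor the men’s image (4.4% vs. 3.6%), while women convert at 6.0% on the women’s image versus 2.0% on the men’s. Serving each segment its winner projects to 5.2%, higher than either pooled result. In a real test you would also check each segment for statistical significance and define the segments before looking at the data, so you aren’t fishing for a favorable split.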

Designing your next set of A/B tests

1. Don’t go overboard with segmentation

It’s one thing to emphasize certain aspects of the site for a specific segment, but you don’t want to be too extreme in your tests. If you know that the person visiting your site is a woman, it makes sense to de-emphasize men’s products. At the same time, you don’t want to completely remove those products from the site experience, because you don’t know what she’s shopping for. She could be buying a gift for her boyfriend or husband, for example.
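One way to emphasize without excluding is to boost, rather than filter, products that match a segment’s likely preferences. In this minimal sketch, the `Product` fields, category labels, existing `base_score`, and the 1.5× boost are all hypothetical; the point is that every product stays in the list and only the order changes.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    category: str  # hypothetical labels, e.g. "mens" or "womens"
    base_score: float  # assumed existing relevance/popularity score


def rank_for_segment(products: list, preferred_category: str, boost: float = 1.5) -> list:
    """Reorder products so the segment's preferred category rises to the top.

    Nothing is filtered out: every product stays in the list, so a shopper
    buying a gift for someone else can still find it.
    """
    def score(p: Product) -> float:
        return p.base_score * (boost if p.category == preferred_category else 1.0)

    return sorted(products, key=score, reverse=True)


catalog = [
    Product("Men's rain shell", "mens", 0.9),
    Product("Women's rain shell", "womens", 0.8),
    Product("Men's fleece", "mens", 0.7),
    Product("Women's fleece", "womens", 0.5),
]
ranked = rank_for_segment(catalog, "womens")
print([p.name for p in ranked])
```

The boost strength is itself a reasonable thing to test: a mild boost hedges against misidentifying the visitor or misreading what she’s shopping for.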

2. Start big, then get smaller

It’s helpful to start with your largest segments, then work your way down to smaller and smaller ones. Larger segments have more traffic, which makes it faster and easier to reach statistical significance, so deal with that low-hanging fruit first.

With the easy wins out of the way, you can take your time designing more granular tests for specific segments. The same holds true for the various sections of your website: start with higher-traffic pages like your homepage, and only then move on to lower-traffic pages.
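To see why traffic matters so much, here is a sketch using the standard normal-approximation sample-size formula for comparing two conversion rates (two-sided test, 5% significance, 80% power by default). The 4% → 5% lift and the daily traffic figures for the two segments are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(p_control: float, p_variant: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per arm for a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p_control - p_variant) ** 2)


def days_to_finish(p_control: float, p_variant: float,
                   daily_visitors: int, arms: int = 2) -> int:
    """Days until every arm reaches the required sample size, given a segment's daily traffic."""
    n = sample_size_per_arm(p_control, p_variant)
    return math.ceil(n * arms / daily_visitors)


# Hypothetical segments: detecting a lift from 4% to 5% conversion.
for segment, daily in [("all returning visitors", 2000), ("returning visitors in one city", 200)]:
    print(segment, days_to_finish(0.04, 0.05, daily), "days")
```

The required sample is the same for both segments (roughly 6,700 visitors per arm here), so a segment with a tenth of the traffic takes about ten times as long to finish. That is the practical reason to start big.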

3. Refine your segments

One of the most basic segmentation divisions is between new and returning visitors. It’s a good starting point, but those groups can be broken down even further. For instance, you can split returning visitors into those who have completed a purchase and those who haven’t. You can also separate high-value customers (those with a high average order value who complete their purchases and make fewer returns) from “tire kickers” who commit to a purchase less often. The more distinct your segments, the more specific and actionable your results become, as long as each segment still gets enough traffic to reach significance.
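The refinements above can be expressed as a small decision tree. This is a sketch only: the `Visitor` fields and the threshold values are hypothetical and would need to be tuned against your own customer data.

```python
from dataclasses import dataclass


@dataclass
class Visitor:
    visits: int  # total sessions, including the current one
    orders: int  # completed purchases
    avg_order_value: float  # average value of completed orders, in dollars
    return_rate: float  # share of ordered items sent back, 0.0 to 1.0


# Hypothetical thresholds; tune these against your own customer data.
HIGH_AOV = 150.0
LOW_RETURN_RATE = 0.10


def segment(v: Visitor) -> str:
    """Assign a visitor to one of four progressively finer segments."""
    if v.visits <= 1:
        return "new"
    if v.orders == 0:
        return "returning_no_purchase"  # includes the "tire kickers"
    if v.avg_order_value >= HIGH_AOV and v.return_rate <= LOW_RETURN_RATE:
        return "returning_high_value"
    return "returning_purchaser"


print(segment(Visitor(visits=6, orders=4, avg_order_value=210.0, return_rate=0.05)))
```

Keeping the rules in one function makes it easy to add another split later without changing how existing segments are defined.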

4. Weigh site activity and recency in your tests

A shopper who visits your site several times a week isn’t the same as one who hasn’t been on the site within the last 90 days. Even if they are both technically “returning” visitors, odds are that they will respond differently.
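A minimal sketch of splitting “returning” visitors by recency and frequency follows. The 90-day lapse window comes from the example above; the cutoff of eight visits in 30 days (roughly twice a week) and the bucket names are hypothetical.

```python
from datetime import date


def activity_bucket(last_visit: date, visits_last_30_days: int, today: date) -> str:
    """Split returning visitors by recency and frequency (hypothetical cutoffs)."""
    days_since = (today - last_visit).days
    if days_since > 90:
        return "lapsed"  # hasn't been back in over 90 days
    if visits_last_30_days >= 8:
        return "frequent"  # roughly twice a week or more
    return "occasional"


print(activity_bucket(date(2024, 5, 30), 10, today=date(2024, 6, 1)))
```

Passing `today` in explicitly, instead of calling `date.today()` inside the function, keeps segment assignment reproducible when you re-analyze a finished test.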

5. Segment by customer value

Some customers are more valuable than others, and you should make sure the site is optimized for your highest-value customers. Start by identifying the “whales.” Once you know who they are, you can emphasize higher-priced products for them by default and suggest products preferred by other shoppers with a high average order value.
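Identifying whales can be as simple as ranking customers by whatever you treat as “value” (lifetime spend or average order value) and taking the top slice. In this sketch the 10% cutoff and the customer IDs are hypothetical. Integer arithmetic is used for the cutoff to avoid floating-point rounding surprises.

```python
def find_whales(value_by_customer: dict, top_percent: int = 10) -> set:
    """Return the IDs of the top `top_percent` percent of customers by value.

    Ties at the cutoff are broken arbitrarily; at least one customer is returned.
    """
    ranked = sorted(value_by_customer, key=value_by_customer.get, reverse=True)
    cutoff = max(1, len(ranked) * top_percent // 100)
    return set(ranked[:cutoff])


# Hypothetical lifetime spend for 20 customers: c1 spent $10, ..., c20 spent $200.
customers = {f"c{i}": float(i * 10) for i in range(1, 21)}
print(sorted(find_whales(customers)))
```

The resulting set can then serve as a test segment, for example to compare a default sort that favors higher-priced products against your current sort for whales only.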

6. Consider geography and weather

Some states charge sales tax on online purchases while others don’t. This creates two distinct, and often overlooked, customer segments. Why not test offers for people who live in tax-free states, with messaging that highlights the fact that the purchase will be tax-free? As simple as the tactic seems, it may encourage shoppers to spend a little more, and a segmented test is how you find out.

Along the same lines, weather-based targeting is worth keeping in mind. For example, a person who lives in a city experiencing a major snowstorm probably isn’t going to be shopping for patio furniture. That same audience, however, may respond strongly to deals on winter clothes and space heaters.
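Geography and weather rules like these can be sketched as a simple treatment selector. This assumes you already have each visitor’s state and a local weather condition from some geolocation and weather lookup, which isn’t shown. The banner names are hypothetical, and the state list is illustrative only: verify current tax rules before relying on it. In an actual test, visitors in each segment would be randomly split between the targeted banner and the default so you can measure the difference.

```python
from typing import Optional

# Illustrative only: states with no statewide sales tax. Alaska is omitted
# because many of its localities levy their own sales tax. Verify before use.
NO_STATE_SALES_TAX = {"DE", "MT", "NH", "OR"}


def pick_banner(state: str, weather: Optional[str] = None) -> str:
    """Choose a hypothetical banner from a visitor's state and local weather.

    Weather takes priority because it reflects what the visitor likely needs right now.
    """
    if weather == "snowstorm":
        return "winter_gear_deals"
    if state in NO_STATE_SALES_TAX:
        return "tax_free_shopping"
    return "default"


print(pick_banner("OR"), pick_banner("OR", "snowstorm"), pick_banner("CA"))
```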

7. Focus on the “why” of the test, not the “what”

When people are new to A/B testing, they often don’t think through test design. They focus on the “what” of the test (for example, which button size and color combination will generate the most clicks) while overlooking the “why” behind it. Without a central hypothesis driving these tests, individual results aren’t all that meaningful, and “what”-based testing programs quickly run out of ideas and lose steam.

It’s not enough to know that one offer outperformed another in an A/B test within a certain segment. You also need to ask:

- What are the psychological implications of the test?

- What does it say about this group of customers?

- How can the lessons be generalized or used to inform decisions across the entire business?

As a rule, the most meaningful tests are the ones with the most far-reaching implications for the business as a whole.
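One lightweight way to keep the “why” in front of the “what” is to require a written plan before any test launches. The record below is a hypothetical template, not a standard format; the field names are assumptions. The `is_ready` check simply refuses plans that describe a change without a hypothesis, a target segment, or the decision the result would inform.

```python
from dataclasses import dataclass, field


@dataclass
class TestPlan:
    """A hypothetical test-plan record that forces the 'why' to be written down first."""
    hypothesis: str  # the belief about customers being tested
    segment: str  # who the test targets
    change: str  # the 'what': the variation shown
    primary_metric: str
    expected_direction: str  # "increase" or "decrease"
    if_confirmed: str  # the business decision the result would inform
    guardrails: list = field(default_factory=list)  # metrics that must not get worse

    def is_ready(self) -> bool:
        """A plan isn't ready until the 'why' fields are filled in."""
        return all(s.strip() for s in (self.hypothesis, self.segment, self.if_confirmed))


plan = TestPlan(
    hypothesis="Shoppers in states without sales tax respond to explicit tax-free messaging",
    segment="Visitors located in states with no statewide sales tax",
    change="Banner stating that the order is tax-free",
    primary_metric="average order value",
    expected_direction="increase",
    if_confirmed="Add tax-free messaging to checkout for this segment",
    guardrails=["conversion rate", "return rate"],
)
print(plan.is_ready())
```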

8. Keep your test ideas fresh

It’s easy to get stuck in a rut with your testing strategy, or to find your test ideas clustering around one specific kind of test. Design-oriented thinkers often get lost testing endless variations on a UI design, even as those tests yield diminishing returns. If you want your tests to generate clear, impactful insights, you need to try different kinds of tests. You can test any element of your site (offers, copy, images, layout, user experience) for any segment, so why not take advantage of it?
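A quick way to notice when test ideas are clustering is to tally your log of past tests by the kind of element each one changed. The log format, the element categories, and the 15% threshold in this sketch are hypothetical.

```python
from collections import Counter

ELEMENT_TYPES = ["offer", "copy", "image", "layout", "ux"]


def underused_types(test_log: list, threshold: float = 0.15) -> list:
    """Element types that account for less than `threshold` of past tests."""
    counts = Counter(t["element"] for t in test_log)
    total = sum(counts.values()) or 1  # avoid dividing by zero on an empty log
    return [e for e in ELEMENT_TYPES if counts[e] / total < threshold]


# Hypothetical log: a team that mostly tests layout tweaks.
test_log = [{"element": "layout"}] * 7 + [{"element": "image"}] * 2 + [{"element": "copy"}]
print(underused_types(test_log))
```

Adding a `segment` field to each log entry and counting `(element, segment)` pairs the same way would show which segments have never been tested at all.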

One final thought

Just because a test gives clear, statistically significant results doesn’t mean the test has merit. Your tests should also yield actionable insights into both user psychology and the overall business model. You could run a test offering designer t-shirts for $1 each, and it would almost certainly produce clear results. Does that mean selling t-shirts at a heavy loss is a good business decision? Obviously not, but there are plenty of examples of people making major strategic decisions based on optimization tests that are just as flawed.
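The $1 t-shirt problem is easy to catch if every test reports a business metric alongside its conversion rate. This sketch uses invented prices, costs, and order counts purely to illustrate the idea. It computes profit per visitor as a guardrail next to conversion rate.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    visitors: int
    orders: int
    price: float  # selling price per item
    unit_cost: float  # cost to you per item

    @property
    def conversion_rate(self) -> float:
        return self.orders / self.visitors

    @property
    def profit_per_visitor(self) -> float:
        return self.orders * (self.price - self.unit_cost) / self.visitors


# Hypothetical numbers for the $1 t-shirt example; not real results.
control = VariantResult(visitors=10_000, orders=300, price=25.0, unit_cost=10.0)
dollar_tee = VariantResult(visitors=10_000, orders=2_000, price=1.0, unit_cost=10.0)

for name, r in [("control", control), ("$1 t-shirt", dollar_tee)]:
    print(f"{name}: conversion {r.conversion_rate:.1%}, "
          f"profit per visitor ${r.profit_per_visitor:.2f}")
```

Judged on conversion rate alone, the $1 variant is a runaway winner. Judged on profit per visitor, it loses money on every visit.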

When possible, your A/B tests should do more than just produce a winner and a loser. They should validate business assumptions, identify new product areas, and tell you more about the people who spend their time with you.