There are no failed A/B tests: How to ensure every experiment yields meaningful results

With a comprehensive testing methodology and a renewed perspective toward experiment analysis, marketers can start gleaning meaningful insights from every A/B test, no matter the outcome.

Here’s what you need to know:

- Shift your mindset: A/B tests are for learning, not just winning or losing.

- Treat it like science: Define your question, hypothesis, and variables for clear results.

- Look beyond revenue: Analyze all findings, even unexpected ones, to understand user behavior.

- Flat and negative results are valuable: They expose audience segments and guide further tests.

- Personalize your experiments: Target specific segments for more impactful results.

Up until the late 19th century, the rational world believed there was an invisible, mysterious substance that filled all of space and carried light through it – aether. Scientists Albert A. Michelson and Edward W. Morley set out to use their own purpose-built apparatus to prove the existence of aether once and for all. To their (and the world’s) shock, their experiment pointed to quite the opposite: it found no trace of aether. This would later become known as the most famous “failed” experiment in history. And while it disproved their hypothesis, it ultimately shifted the world’s understanding of the fundamental workings of the universe, eventually leading to Einstein’s theory of relativity, now known as one of the two pillars of modern-day physics.

Michelson and Morley failed spectacularly, setting a new standard for how we think about experimentation. Today’s marketers use the same language and methodology as scientists when testing – beginning with a hypothesis and concluding with a result. Yet marketers, hungry for conclusive results or a specific, desired outcome, often fail to see beyond black-and-white results, referring to one test as a winner and another as a loser. The reality, however, is that there is no such thing as a failed test; there are only insights.

Below, learn more about establishing a foolproof testing methodology, destined to yield impactful learnings, as well as why there is no such thing as a failed test, illustrated by the lessons from some of the world’s leading eCommerce brands.

Reframing a marketer’s approach to testing

Your typical A/B test is often attached to a KPI. For example, a retailer looking to choose which recommendation strategy will drive more add-to-carts – and eventually more revenue – is invested in determining which variation will drive more action. Ultimately, the marketer has a vested interest in identifying how to use testing to generate revenue, and if a test fails to outpace the status quo, it is typically considered a failure.

However, there is so much to learn from a “failed” test. Every experiment comes with a unique set of learnings, no matter the outcome, and today’s practitioners must unlearn the concept of failed experimentation and embrace a more scientific approach to testing. Doing so won’t simply inform future tests; it will also help organizations better understand and reimagine experience creation and the end-to-end user experience, which is likely to pay dividends in the long run.

An airtight approach to A/B testing

As in science, marketers get the most value from an experiment when they approach tests methodically. Lucky for you, we’ve built out our own scientific method – a seven-step, foolproof testing plan, essential for drawing conclusions and deriving meaningful insights from any test.

1. Ask a question

Questioning an unknown and seeking the solution is the impetus for your experimentation, and identifying a central question will drive your entire experiment. The question at hand will not just inform your hypothesis; it will also provide you with a jumping-off point from which to base your educated guess.

In order to walk through each step of the experimentation process, let’s focus on a specific example: Will offering a discount to new users increase revenue per user?

2. Refine your question into a hypothesis

Before coming up with a hypothesis, use your existing knowledge to your advantage. Any information already available about your business and your customers can inform your approach to testing and help you refine your central question before moving ahead with experimentation. If you’ve researched or tested the performance of discounts or incentives in the past, apply those learnings here.

Let’s say you’ve found that new users are highly price-sensitive and rarely order $60 or more. This is a strong indicator that incentives will be effective if they reduce the net price below $60.

Refine your experiment so the incentive drops the average order value (AOV) below $60. 10% off or more should do that.
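
To see which orders a given discount actually pulls under the $60 line, a quick calculation helps. The sketch below is only an illustration: the function name is made up for this example, and the only number taken from the text is the $60 threshold.

```python
THRESHOLD = 60.00  # net price ceiling from the example above


def max_order_value_under_threshold(discount_rate: float,
                                    threshold: float = THRESHOLD) -> float:
    """Largest pre-discount order value whose discounted price
    still lands at or below the threshold."""
    return threshold / (1.0 - discount_rate)


if __name__ == "__main__":
    for rate in (0.10, 0.15, 0.20):
        ceiling = max_order_value_under_threshold(rate)
        print(f"{rate:.0%} off covers orders up to about ${ceiling:.2f}")
```

In other words, 10% off only brings orders of roughly $66 or less under $60, so how far the discount needs to go depends on how your order values are distributed.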

Now, consider refining your question into your best guess, a hypothesis: Offering a discount of 10% or more to new users will likely increase revenue per user.

3. Identify the variables

By narrowing down the question at hand, we are able to identify the major variables in our experiment. Every experiment has at least one independent variable and one dependent variable. Here, the independent variable is the discount, and the dependent variable is revenue because the discount is what may impact revenue. Meanwhile, the control you’re testing performance against is offering no discount to a new user.

Always use variables that will provide a clear path forward after the test concludes. For example, test 10%, 15%, and 20% discounts – each as its own variation within a single test – to understand if the incentive amount matters and whether or not it should be increased or decreased in future tests.
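
One way to set up a test like this is to declare the control and each discount level as named variations and assign every new user to one of them deterministically. The following is a minimal sketch, not a description of any particular testing platform: the variation names, the experiment key, and the hashing approach are all assumptions made for illustration.

```python
import hashlib

# Hypothetical variation names; the discount levels come from the example above.
VARIATIONS = {
    "control": 0.00,
    "discount_10": 0.10,
    "discount_15": 0.15,
    "discount_20": 0.20,
}


def assign_variation(user_id: str, experiment: str = "new_user_discount") -> str:
    """Map a user to one variation, with equal odds for each.

    Hashing the experiment key together with the user ID means the same
    user always sees the same variation for the life of the test.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(VARIATIONS)
    return list(VARIATIONS)[bucket]


if __name__ == "__main__":
    for uid in ("user-1", "user-2", "user-3"):
        name = assign_variation(uid)
        print(uid, name, f"{VARIATIONS[name]:.0%} off")
```

Keeping the assignment stable per user matters because a visitor who sees 10% off on Monday and 20% off on Tuesday would muddy the results for both variations.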

We are left with at least one unidentified variable: audience membership. We have new users from a number of different sources, including organic search, paid search, direct traffic, and more, and each of these factors can influence how an individual responds to the experiment. Additionally, always be sure you can track how a campaign performs for important audiences before you begin testing, and limit yourself to testing variables that are truly relevant to your experiment.

4. Establish proper measurement practices

A lack of uplift ≠ a failed test. A test only “fails” if it is not set up properly, yielding unreliable or unreadable results. If you suspect your test is impacting multiple dependent variables, be sure to establish how you will properly measure the impact for each ahead of time to ensure your results are as accurate as possible.
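
Deciding on measurement ahead of time can be as simple as writing down exactly how each dependent variable is computed. Here is a rough sketch that reports revenue per user and conversion rate for every variation. The `Visit` record and its fields are hypothetical, and the sample rows are invented purely to show the shape of the output.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Visit:
    user_id: str
    variation: str
    revenue: float  # 0.0 when the user did not purchase


def summarize(visits):
    """Return users, conversion rate, and revenue per user for each variation."""
    users = defaultdict(set)
    buyers = defaultdict(set)
    revenue = defaultdict(float)
    for v in visits:
        users[v.variation].add(v.user_id)
        revenue[v.variation] += v.revenue
        if v.revenue > 0:
            buyers[v.variation].add(v.user_id)
    return {
        name: {
            "users": len(ids),
            "conversion_rate": len(buyers[name]) / len(ids),
            "revenue_per_user": revenue[name] / len(ids),
        }
        for name, ids in users.items()
    }


if __name__ == "__main__":
    # Invented sample data, for illustration only.
    sample = [
        Visit("a", "control", 0.0),
        Visit("b", "control", 70.0),
        Visit("c", "discount_10", 54.0),
        Visit("d", "discount_10", 45.0),
    ]
    for name, stats in summarize(sample).items():
        print(name, stats)
```

Tracking both metrics side by side makes it easier to spot a discount that lifts conversions while lowering revenue per user, or the other way around.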

5. Run the experiment

Now that you’ve identified the question, variables, and how you’ll measure your results, it’s time to run the test. We recommend running it for at least 30 days to reach statistically significant results. Be sure to consider your audience variables – the traffic sources, in our example – as well, as it may take longer to reach statistical significance at the audience level, even if your overall experiment has reached statistical significance.
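
How long a test really needs depends on traffic and on the size of the effect you hope to detect. As a rough guide, the sketch below uses the standard normal-approximation sample size formula for comparing two conversion rates. It makes several assumptions that are not from the article: conversion rate stands in for revenue per user, the significance level is 5%, the power is 80%, and the rates and traffic figures in the usage example are invented.

```python
import math
from statistics import NormalDist


def users_per_variation(baseline_rate: float, expected_rate: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed in each variation to detect the difference
    between two conversion rates with a two-sided test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_needed(users_needed: int, variations: int, daily_users: int) -> int:
    """Days of traffic required when users are split evenly across variations."""
    return math.ceil(users_needed * variations / daily_users)


if __name__ == "__main__":
    # Invented inputs: 3.0% baseline conversion, hoping to reach 3.6%.
    n = users_per_variation(0.030, 0.036)
    print("Users per variation:", n)
    print("Days at 2,000 new users/day across 4 variations:",
          days_needed(n, variations=4, daily_users=2000))
```

Running the same calculation with the traffic of a single audience, such as paid search alone, shows why segment-level results usually take longer to become readable than the overall result.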

6. Evaluate the results

It’s important to remember that testing isn’t simply about producing more revenue; it’s about acquiring knowledge. You can only increase your bottom line once you’ve generated learnings that you can leverage to positively impact testing outcomes and optimize performance in future campaigns.

Look beyond whether a test produced positive or negative uplift; instead, go back to your hypothesis and determine whether the results support it.

A few questions you can pose after the experiment has run its course include:

- Did every audience respond the same way?

- Did the results uncover any other unexpected variables?

- Are there any additional dependent variables (e.g. conversion rate)?

- How did the degree of the variable impact the outcome? (e.g. Did a 10% discount produce different results than a 20% discount?)
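
The first question, whether every audience responded the same way, lends itself to a simple breakdown of uplift by segment. The sketch below assumes you can export aggregated rows of segment, variation, user count, and revenue. That row layout, the function name, and the sample figures are all hypothetical.

```python
from collections import defaultdict


def uplift_by_segment(rows, control="control"):
    """Relative uplift in revenue per user versus the control, per segment.

    rows: iterable of (segment, variation, users, revenue) tuples.
    Assumes every segment has a control row with non-zero revenue.
    """
    rpu = defaultdict(dict)
    for segment, variation, users, revenue in rows:
        rpu[segment][variation] = revenue / users

    report = {}
    for segment, by_variation in rpu.items():
        base = by_variation[control]
        report[segment] = {
            name: (value - base) / base
            for name, value in by_variation.items()
            if name != control
        }
    return report


if __name__ == "__main__":
    # Invented sample data, for illustration only.
    sample = [
        ("organic", "control", 100, 500.0),
        ("organic", "discount_10", 100, 550.0),
        ("referral", "control", 100, 400.0),
        ("referral", "discount_10", 100, 300.0),
    ]
    for segment, uplifts in uplift_by_segment(sample).items():
        for name, value in uplifts.items():
            print(f"{segment:10s} {name}: {value:+.1%}")
```

A breakdown like this only points to where to look next. Each segment still needs enough traffic of its own before its uplift can be trusted.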

7. Optimize your campaign

After conducting a thorough evaluation of your results, you should have an answer to the original question you posed. Use these results to inform your next steps, whether it is to adopt a practice into your marketing strategy or run additional tests to reach more granular results. These insights on audience performance and the impact each independent variable has on your KPI can help you set up additional experiments that are more personalized, serving each salient audience with variations they will most likely resonate with.

Breaking down the true impact of every test outcome

Now that you have all the tools you need to run an experiment, let’s talk a bit more about assessing your results. An experiment can sometimes result in a flat test: one that concludes with no significant difference in performance between the control and the variation(s). While often feared, flat tests present a powerful learning opportunity. There are a number of reasons a test can be flat: timing, audience size, or an unforeseen variable, for starters. The reality, however, is that flat tests and tests that result in negative lift can eventually lead to larger uplifts down the road.
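
To make “no significant difference” concrete, one common approach is a two-proportion z-test on conversion counts. The sketch below is one way to label an outcome as flat, positive, or negative. The 5% significance level, the use of conversion rate as the metric, and the counts in the usage example are assumptions for illustration, not figures from the tests described in this article.

```python
import math
from statistics import NormalDist


def classify_outcome(control_conversions: int, control_users: int,
                     variant_conversions: int, variant_users: int,
                     alpha: float = 0.05):
    """Label a result 'flat', 'positive', or 'negative' using a two-sided
    two-proportion z-test. Assumes at least one conversion and at least
    one non-conversion overall, so the standard error is non-zero."""
    p_control = control_conversions / control_users
    p_variant = variant_conversions / variant_users
    pooled = ((control_conversions + variant_conversions)
              / (control_users + variant_users))
    std_err = math.sqrt(pooled * (1 - pooled)
                        * (1 / control_users + 1 / variant_users))
    z = (p_variant - p_control) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    if p_value >= alpha:
        return "flat", p_value
    return ("positive" if z > 0 else "negative"), p_value


if __name__ == "__main__":
    # Invented counts, for illustration only.
    label, p = classify_outcome(300, 10_000, 305, 10_000)
    print(label, round(p, 3))
```

A “flat” label here means the test could not tell the variations apart at the chosen threshold, which is exactly the cue to dig into individual audiences.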

The magnitude of lift depends on the scale of change, and making big changes can be scary; they open the door to potentially negative results. However, if fear stifles risk-taking, your business will never be able to truly undergo significant transformation and growth. Take comfort knowing that few tests are ever flat for everyone. There will almost always be a certain audience that responds positively; you just have to figure out which audience that is.

Let this reality be a source of optimism for future revenue growth, and remember: your personalization program will thrive when risk-taking is encouraged because the learnings you gather will be invaluable.

How Chal-Tec turned risks into dividends

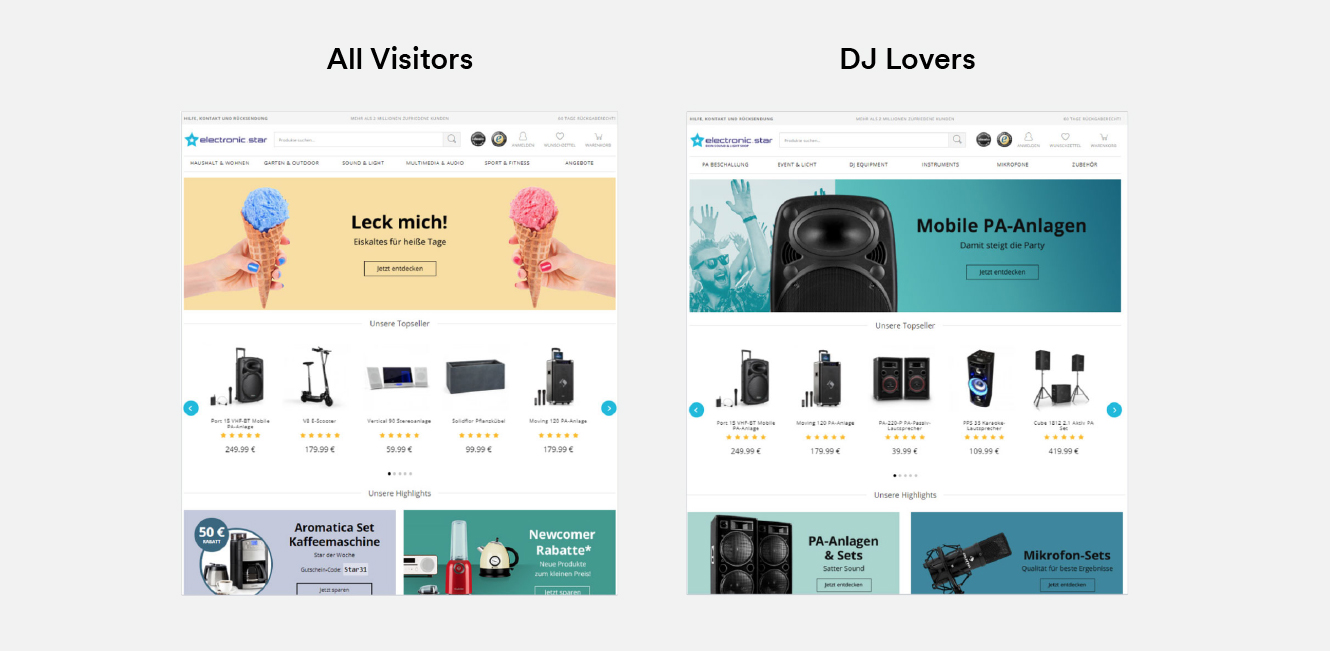

Consider a campaign run by the giant multi-category retailer, Chal-Tec. They started out by specializing solely in DJ equipment, but over time expanded into multiple categories. While this expansion increased overall revenue, they began to lose touch with their core DJ audience.

Chal-Tec was able to get back to its roots because they did not fear flat test results. They launched a massive experiment where they personalized the entire site experience to their DJ audience, changing everything from the logo to the navigation options. The scale of these changes ensured one thing: the outcome would reveal dramatic results, either positive or negative.

The company designed this test to ensure their learnings would have clear takeaways and action items, ones that would transform their future on-site marketing strategy and how they build customer experiences:

- A negative or flat lift would push them to rethink their assumptions about the audience and consider sub-audiences that may respond positively to this test.

- A positive uplift would be the affirmation they need to move forward with their personalized approach for DJ equipment enthusiasts.

Ultimately, the outcome of their test was rewarding, resulting in a 37% increase in performance. For businesses like Chal-Tec, these results and learnings are invaluable: they not only help achieve the test objective, but also advance a larger business objective – learning how to tap experience creation to remain relevant during the era of Amazon.

Instances where unexpected results leave the biggest mark

Working with more than 350 global brands to implement experiments, we’ve seen our fair share of “failed” tests. However, we have also witnessed how unexpected results can work to a team’s advantage.

An American underwear manufacturer determined how to better allocate its marketing dollars

The manufacturer was worried their homepage content was getting stale. They invested in producing more high-quality photography so they could refresh their homepage content several times per month. They then decided to run a test that would prove this investment was generating revenue. They ran an A/B test for two months, bucketing site visitors into two different groups for the duration of the experiment.

The test setup was as follows:

- Control: Content updates 1x per month

- Variation: Content updates 2x per month

They were surprised to find the variation produced no uplift in revenue against the control. Rather than dismissing this as a failed test, the company changed their assumptions about the value of refreshed content and decided to no longer invest heavily in photography. And while the test didn’t produce more revenue directly, it gave them the insight they needed to properly divvy up their budget and avoid wasteful spending.

A U.S. fashion retailer discovered how to optimize its discount codes

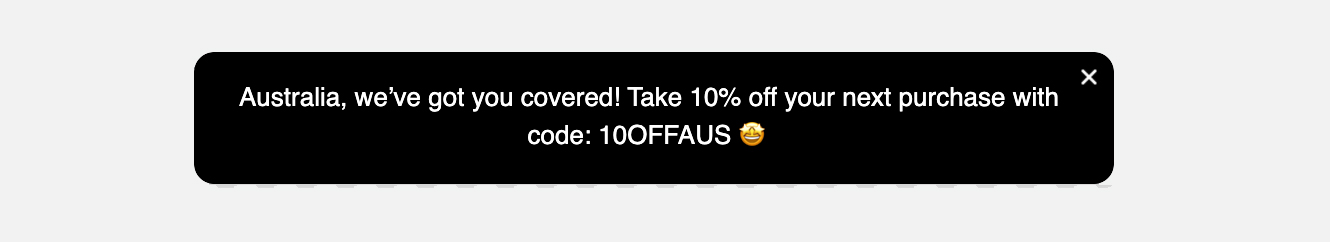

The fashion label decided to run a promotion offering 10% off to their Australian shoppers using a simple notification with an offer code.

They hypothesized that offering 10% off would drive conversions, boosting average revenue per user (ARPU) by 5-10%. Instead, conversions decreased by 23%. After investigating further, they discovered a moderating variable that turned this test into a key insight: shoppers who had been primed for the discount by reading about it on a referral partner’s website loved the continuity that the notification provided. As a result, when they ran a second test, they targeted only referral traffic and witnessed a 14% uplift in revenue.

Start reassessing your assumptions

“Failed” tests should push you to reassess your existing assumptions because when what you anticipate is more in line with reality, you’ll be able to make smarter business decisions. To get the maximum value from a test result, be prepared to react to negative or flat results. Look into why a test performed a certain way, dig into the results at the audience level, and build processes that will help you answer why an experiment turned out the way it did. Prepare for the unexpected, and remember: sometimes the results you didn’t expect are the ones with the potential to make the biggest impact.