Beyond A/B testing: Multi-armed bandit experiments

Learn how multi-armed bandit algorithms conclude experiments earlier than the classic A/B test while making fewer statistical errors along the way.

Here’s what you need to know:

- Multi-armed bandit experiments, particularly contextual bandits, let you personalize content and target individual users, leading to faster results and improved efficiency.

- Break free from waiting for statistical significance: unlike traditional A/B testing, bandit algorithms continuously allocate traffic based on performance, optimizing for results in real time.

- Bandits can identify individual user preferences and deliver the most effective variations, maximizing engagement and conversions.

- Bandit experiments require less data than traditional A/B tests, making them ideal for situations with limited traffic or resources.

- Personalize offers, content, and recommendations based on individual user behavior for a more relevant and impactful customer experience.

Over the past decade or two, our ability to integrate, analyze, and manipulate data has vastly improved. Conversion optimization continues to be key to digital strategy, and A/B testing and experimentation have become essential methodologies for companies trying to grow their business and improve their bottom line.

Traditionally, companies have run randomized A/B tests with just two variants (a baseline or control, and a treatment), looking to find a single statistically significant winner. Over time, rather than limiting the experiment to just two variations, marketers have started layering in additional segments to target, ensuring they get the most out of their experiments.

However, although many companies today run controlled experiments on a regular basis, many are dissatisfied with the uplifts they achieve and with the time it takes to reach valid results. As the pressure to deliver improved results through site optimization and testing increases, marketers are constantly pushing the boundaries of existing methodologies.

To understand these limitations, and to push beyond them, I will examine how traditional A/B testing works and how science and technology have broadened the scope of what optimization can achieve.

Rethinking Basic Testing Methodology

For most companies today, A/B/n testing and its multivariate cousin are ubiquitous. For quite some time, these methodologies have been the only approaches available for testing on websites, despite their many limitations.

For example, while these approaches offer some level of testing flexibility, they are heavily dependent on finding a single best-performing (“winning”) variation. Marketers are now challenging the status quo by asking:

- Why do we have to wait for sufficient sample size and high statistical significance, only to then manually stop the experiment instead of having a mechanism that can converge smoothly and automatically towards the best performing variation?

- How can we automatically leverage huge amounts of data for improved and optimized results?

- How can we help our organizations meet the rising bar of customer expectations for highly relevant content?

- Why do we have to settle for a single “winning” content variation when we should be able to serve multiple, targeted variations based on the preferences and online behavior of individual customers?

- Why run experiments that maximize goal conversions instead of directly maximizing online revenue?

- On the technical front, how can we reduce operational and managerial costs and achieve powerful scale, agility, and speed?

These are not simple questions to answer. However, after working their way through them, many realize that classic testing methodologies simply cannot provide the solution.

The Bandit Approach

In traditional A/B testing methodologies, traffic is evenly split between two variations (both get 50%). Multi-armed bandits allow you to dynamically allocate traffic to variations that are performing well while allocating less and less traffic to underperforming variations. Multi-armed bandits are known to produce faster results since there’s no need to wait for a single winning variation.
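To make dynamic allocation concrete, here is a minimal Python sketch of one common bandit algorithm, Thompson sampling with Beta-Bernoulli posteriors. It is only one of many possible bandit strategies, not the method of any particular product; the conversion rates and the `thompson_sampling` function are hypothetical and exist only for illustration.

```python
import random


def thompson_sampling(true_rates, visitors, seed=0):
    """Simulate Beta-Bernoulli Thompson sampling over several variations.

    true_rates: hypothetical conversion rate of each variation (hidden from the algorithm).
    Returns how many visitors each variation received.
    """
    rng = random.Random(seed)
    k = len(true_rates)
    successes = [0] * k  # conversions observed per variation
    failures = [0] * k   # non-conversions observed per variation
    pulls = [0] * k      # visitors assigned per variation

    for _ in range(visitors):
        # Draw a plausible conversion rate for each variation from its Beta posterior
        samples = [rng.betavariate(1 + successes[i], 1 + failures[i]) for i in range(k)]
        # Serve the variation whose sampled rate is highest
        arm = max(range(k), key=lambda i: samples[i])
        pulls[arm] += 1
        # Simulate whether this visitor converts, then update that variation's posterior
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return pulls


if __name__ == "__main__":
    rates = [0.04, 0.05, 0.10]  # hypothetical conversion rates
    print(thompson_sampling(rates, 10_000))
```

With these assumed rates, the third variation ends up receiving most of the traffic, while the two weaker variations get a shrinking share that is just enough to keep checking them.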

Bandit algorithms go beyond classic A/B/n testing, encompassing a large family of algorithms that tackle different problems, all for the sake of achieving the best results possible. With the help of a relevant stream of user data, multi-armed bandits can become context-based. Contextual bandit algorithms rely on an incoming stream of user context data, either historical or fresh, to make better algorithmic decisions in real time.
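In its simplest form, a contextual bandit can be sketched by keeping separate statistics for each context value. The Python below assumes a single categorical context (a hypothetical device type) and runs the UCB1 algorithm independently per context; more general contextual bandit algorithms, such as LinUCB, model richer feature vectors instead. The class name, contexts, and rates are illustrative assumptions.

```python
import math
import random
from collections import defaultdict


class PerContextUCB1:
    """Simplified contextual bandit: independent UCB1 statistics for each context value."""

    def __init__(self, n_arms):
        self.n_arms = n_arms
        self.pulls = defaultdict(lambda: [0] * n_arms)      # context -> visitors per arm
        self.rewards = defaultdict(lambda: [0.0] * n_arms)  # context -> total reward per arm

    def select(self, context):
        pulls = self.pulls[context]
        # Try every arm once in this context before relying on confidence bounds
        for arm in range(self.n_arms):
            if pulls[arm] == 0:
                return arm
        total = sum(pulls)
        rewards = self.rewards[context]

        def ucb(arm):
            # Observed mean plus an exploration bonus that shrinks as the arm is tried more
            mean = rewards[arm] / pulls[arm]
            return mean + math.sqrt(2 * math.log(total) / pulls[arm])

        return max(range(self.n_arms), key=ucb)

    def update(self, context, arm, reward):
        self.pulls[context][arm] += 1
        self.rewards[context][arm] += reward


def simulate(visitors=20_000, seed=1):
    rng = random.Random(seed)
    # Hypothetical conversion rates: each context prefers a different variation
    true_rates = {"mobile": [0.08, 0.03], "desktop": [0.03, 0.08]}
    bandit = PerContextUCB1(n_arms=2)
    for _ in range(visitors):
        context = rng.choice(["mobile", "desktop"])
        arm = bandit.select(context)
        reward = 1.0 if rng.random() < true_rates[context][arm] else 0.0
        bandit.update(context, arm, reward)
    return {ctx: list(p) for ctx, p in bandit.pulls.items()}


if __name__ == "__main__":
    print(simulate())
```

Because each context learns separately, mobile visitors drift toward variation 0 and desktop visitors toward variation 1, so neither variation is a single global winner.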

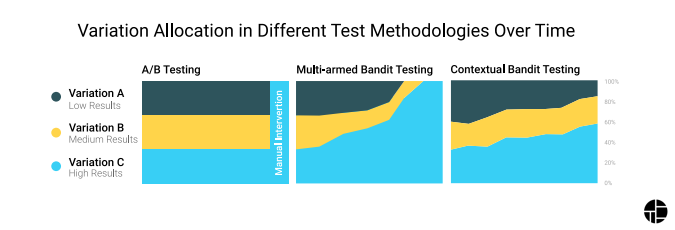

Below is a visualization showing how the classic A/B/n testing approach would split the traffic between several variations (three in this case) compared to the multi-armed bandit and contextual bandit testing approaches.

This visualization shows how bandit tests can yield better results over time. While the classic approach requires manual intervention once a statistically significant winner is found, bandit algorithms learn as you test, ensuring dynamic allocation of traffic for each variation.
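The difference the visualization describes can be reproduced with a small simulation. The Python sketch below compares a fixed even split against epsilon-greedy, a simple bandit strategy chosen here for brevity; the rates, visitor count, and `epsilon` value are hypothetical.

```python
import random


def even_split(true_rates, visitors, rng):
    """Classic A/B/n: every visitor is assigned to a variation uniformly at random."""
    conversions = 0
    for _ in range(visitors):
        arm = rng.randrange(len(true_rates))
        conversions += rng.random() < true_rates[arm]
    return conversions


def epsilon_greedy(true_rates, visitors, rng, epsilon=0.1):
    """Bandit: serve the best observed variation, explore at random with probability epsilon."""
    k = len(true_rates)
    pulls = [0] * k
    wins = [0] * k
    conversions = 0
    for _ in range(visitors):
        if rng.random() < epsilon or 0 in pulls:
            arm = rng.randrange(k)  # explore (also until every variation has been tried)
        else:
            arm = max(range(k), key=lambda i: wins[i] / pulls[i])  # exploit
        converted = rng.random() < true_rates[arm]
        pulls[arm] += 1
        wins[arm] += converted
        conversions += converted
    return conversions


if __name__ == "__main__":
    rates = [0.04, 0.05, 0.10]  # hypothetical conversion rates
    print("even split:", even_split(rates, 20_000, random.Random(42)))
    print("epsilon-greedy:", epsilon_greedy(rates, 20_000, random.Random(42)))
```

Because the even split keeps sending two thirds of visitors to the weaker variations for the whole test, it collects noticeably fewer conversions than the bandit under these assumptions. A fixed `epsilon` still spends 10% of traffic on exploration; decaying it over time is a common refinement.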

Contextual bandit algorithms change which users are exposed to each variation in order to maximize performance, so there is no single winning variation, and no single losing variation either.

At the core of it all is the notion that different people may react differently to the same content. For example, a classic A/B test of promotional content on a fashion retail site whose customers are 80% female would likely conclude that the best-performing content is promotions targeted at women, even though the remaining 20% of customers would respond better to promotions targeted at men.
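The fashion-retail example can be made concrete with a little arithmetic. The conversion rates below are invented for illustration; only the 80/20 customer split comes from the example.

```python
# Share of customers in each segment (from the example)
share = {"women": 0.8, "men": 0.2}

# Hypothetical conversion rate for each (segment, promotion) pair
rate = {
    ("women", "womens_promo"): 0.06, ("women", "mens_promo"): 0.01,
    ("men", "womens_promo"): 0.01, ("men", "mens_promo"): 0.05,
}
promos = ["womens_promo", "mens_promo"]


def overall_rate(policy):
    """Site-wide conversion rate when each segment s sees promotion policy[s]."""
    return sum(share[s] * rate[(s, policy[s])] for s in share)


# Classic A/B test: one promotion for everyone, keep the best overall
single = {p: overall_rate({s: p for s in share}) for p in promos}
winner = max(single, key=single.get)

# Per-segment assignment: each segment gets its own best promotion
personalized = overall_rate({s: max(promos, key=lambda p: rate[(s, p)]) for s in share})

print(winner, round(single[winner], 3), round(personalized, 3))  # womens_promo 0.05 0.058
```

Under these assumed rates, the single A/B winner yields a 5.0% site-wide conversion rate, while serving each segment its own best promotion yields 5.8%, because male customers convert at 5% instead of 1%.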

In many cases, bandit algorithms represent a new approach for achieving sustainable value more quickly and efficiently. With the limitations of traditional A/B/n testing in mind, they offer a new way to conduct segmented experiments.

In particular, this approach allows marketers to:

- Dynamically deliver the best-performing variation at the user level, ensuring each customer sees the best-performing, personalized content variation instead of a generic, one-size-fits-all (and therefore misleading) winner.

- Optimize experiments directly for revenue rather than for goal conversions alone.

- Migrate from a static A/B/n approach to a flexible platform that can automatically fine-tune every element in order to maximize value.

- Gain full control over every piece of data in real time, allowing for better, more effective data-driven decisions.

- Run complicated optimization initiatives with minimum need for IT, even when setting up complex experiments in ever-changing dynamic environments.
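The difference between optimizing for conversions and optimizing for revenue shows up even with two variations. The figures below are hypothetical.

```python
# Hypothetical per-variation statistics
variations = {
    "A": {"conversion_rate": 0.05, "avg_order_value": 40.0},
    "B": {"conversion_rate": 0.04, "avg_order_value": 60.0},
}


def revenue_per_visitor(stats):
    """Expected revenue per visitor = conversion rate x average order value."""
    return stats["conversion_rate"] * stats["avg_order_value"]


by_conversions = max(variations, key=lambda v: variations[v]["conversion_rate"])
by_revenue = max(variations, key=lambda v: revenue_per_visitor(variations[v]))

print(by_conversions, by_revenue)  # A B
```

Variation A wins on conversion rate, but B earns about $2.40 per visitor against A's $2.00. In a bandit, optimizing for revenue means using each visitor's order value (zero when there is no purchase) as the reward instead of a 0/1 conversion flag.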

Armed with the right set of tools and testing models, marketers can put each user's historical and behavioral data to work, ultimately leading to more successful optimization initiatives.