The impact of A/B tests and personalization on SEO

Read about Google's official SEO advice on A/B or multivariate testing, and how to minimize the possible impact of A/B testing on SEO.

Here’s what you need to know:

- A/B testing and personalization can be valuable for SEO, but it’s crucial to implement them correctly to avoid penalization from Google.

- Google advises treating search engine bots like human visitors during A/B testing and personalization.

- Use temporary redirects (302) instead of permanent ones (301) and leverage canonical tags or robots.txt to address duplicate content issues.

- While Googlebot can crawl and index JavaScript-generated content, it’s best to keep important SEO content in the static source code for optimal visibility.

- Dynamic Yield’s tests indicate that Googlebot crawls and indexes all JavaScript-based content, but it renders as plain text in search results.

Optimizing and personalizing the customer experience on a regular basis to improve KPIs serves as an inherently valuable growth tool and is a key part of the digital marketing ecosystem for any online business. However, a common question that arises is whether these efforts can negatively impact Search Engine Optimization (SEO) rankings.

With a few misconceptions floating around on the topic, we’re going to dive into the various concerns, providing solid references to Google’s official stance, and highlight practical guidelines to help you feel more at ease with, and more in control of, your experience optimization campaigns. We’ll also share the results of experiments we conducted here at Dynamic Yield to determine how dynamic JavaScript-generated content affects SEO, including Google crawling and indexation.

Before we jump in, some comments:

- This article refers only to JavaScript-based dynamic content rendered through client-side implementation. (A/B testing and personalization can be delivered through both client- and server-side solutions, which you can read about here).

- If you want to learn about Google’s Intrusive Interstitials Penalty, read this article: The impact of popups, overlays, and interstitials on SEO.

Now let’s get started.

Can A/B tests or on-site personalization wreck your Google ranking?

Google encourages website owners and marketers to conduct constructive testing and ongoing optimizations. If it didn’t, it wouldn’t offer a platform of its own for this exact purpose. The general idea is that personalization doesn’t work against you; it works for you. By improving digital experiences, businesses can improve engagement metrics, increase loyalty, and eventually, build a stronger brand. As John Mueller, Google’s Webmaster Trends Analyst, said in a Reddit AMA:

“Personalization is fine, sometimes it can make a lot of sense.”

But while Google acknowledges these efforts improve the overall customer experience, transforming websites for the better, from a technical point of view, there are four main risks that need to be taken into consideration when optimizing or personalizing web pages.

- Cloaking

- Redirection

- Duplication

- Performance

Risk #1: Cloaking

Cloaking involves presenting one version of a page to search engine bots while showing a different version to the users interacting with it. This is done to manipulate and improve organic rankings, and it is a huge SEO risk: Google’s official Webmaster Guidelines treat it as a serious violation. Running optimization initiatives that serve one variation to search engine User-Agents (such as “Googlebot”) and another to human visitors is what’s considered cloaking – a big no-no.

As a strict principle, you should always treat Googlebot and other search bots the same way you treat real human visitors. Unless you’re intentionally serving Googlebot one version of the content while showing a different version to real users, your optimization and personalization campaigns will not be considered cloaking.

“Ideally you would treat Googlebot the same as any other user-group that you deal with in your testing. You shouldn’t special-case Googlebot on its own, that would be considered cloaking.”

– John Mueller, Google
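One way to follow this advice is to make sure the User-Agent never enters the bucketing logic at all. The sketch below is a minimal illustration, not how any particular testing platform works: `assign_variation`, the experiment name, and the visitor IDs are all hypothetical.

```python
import hashlib


def assign_variation(visitor_id: str, experiment: str, variations: list[str]) -> str:
    """Pick a variation from a stable hash of the experiment and visitor IDs.

    The User-Agent is deliberately not an input, so a crawler such as
    Googlebot is bucketed by the same rule as every other visitor.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    return variations[int(digest, 16) % len(variations)]


# Hypothetical usage: the same visitor always lands in the same bucket.
variation = assign_variation("visitor-123", "homepage-hero", ["control", "variant-b"])
```

Because the assignment depends only on the visitor and experiment identifiers, there is no code path that could special-case a search bot.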

Risk #2: Wrong Type of Redirect

When redirecting visitors to separate URL variations (such as with split tests), Google’s official guidelines instruct you to always use a temporary HTTP response status code (302 Found) or a JavaScript-based redirect. As a general rule of thumb, never use a 301 Moved Permanently HTTP response code for a test. A 302 response helps search engines understand that the redirect is temporary, and that the original, canonical URL should remain indexed.
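As a rough server-side sketch of the redirect described above, the helper below builds the status code and headers for a temporary redirect. `split_test_redirect` and the example URL are hypothetical, and how you attach the result to a response depends on your web framework.

```python
from http import HTTPStatus


def split_test_redirect(variation_url: str) -> tuple[int, dict[str, str]]:
    """Return the status code and headers for a temporary split-test redirect."""
    # 302 Found marks the redirect as temporary, so the original URL stays
    # indexed. HTTPStatus.MOVED_PERMANENTLY (301) would signal the opposite.
    status = HTTPStatus.FOUND
    headers = {"Location": variation_url}
    return int(status), headers


# Hypothetical usage with an example variation URL.
status, headers = split_test_redirect("https://example.com/landing-b")
```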

Risk #3: URL and Content Duplications

URL Duplications – Sometimes, when running online experiments, the system duplicates some of the site’s URLs for different test variations. From an SEO point of view, these on-site duplications carry a relatively minor risk of a real penalty, unless they were created in an attempt to manipulate organic rankings through cloaking. Nevertheless, to remove the possible risk, we recommend a fairly easy fix: adding a rel="canonical" link element to each duplicated URL, pointing at the original. It is also possible to block access to those duplications using a simple robots.txt Disallow directive, or a robots meta tag with a noindex value.
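The two fixes for URL duplications can be sketched as follows. This is an illustration under assumed names: the `/variations/` path, the example URLs, and both helper functions are hypothetical. Note that the options are alternatives. A crawler that is blocked by robots.txt never fetches the page, so it cannot see a canonical or noindex tag placed on it.

```python
from html import escape
from urllib.robotparser import RobotFileParser


def canonical_link(original_url: str) -> str:
    """Build the canonical link element to place in a variation page's <head>."""
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'


# Hypothetical robots.txt that keeps all crawlers out of variation URLs.
ROBOTS_TXT = """\
User-agent: *
Disallow: /variations/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Check whether the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


tag = canonical_link("https://example.com/product")
blocked = not is_crawlable(ROBOTS_TXT, "https://example.com/variations/product-b")
```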

Content Duplications – With on-site optimization and personalization, websites deliver different content variations tailored to different individuals. When the original content plays a big part in determining a web page’s organic rankings, it is vital to keep this content on-site, instead of replacing it with fully dynamically generated content. In addition, keeping the original content can also act as a failover mechanism for unsupported test groups or individuals.

As Google has gotten smarter, its crawling and indexing algorithms can render and understand (to some extent) our JavaScript-based Dynamic Content tags – even indexing the content.

Risk #4: Web Loading Performance

Site speed is a major deal-breaker when it comes to user experience. It has also been incorporated in Google’s organic web search ranking algorithms since 2010, among 200+ other ranking signals. Some users are concerned that optimization and personalization tools may negatively impact the loading time of their webpages, and the truth is that they sometimes can. But there are many ways of minimizing the effect.

- Set the tracking script to run asynchronously – An asynchronous script loads in parallel with the rest of the page, so it does not slow the page down. The trade-off is that it can cause a “flicker” on the page, where the original page loads, followed shortly by the variation page. Dynamic Yield, for example, uses a synchronous snippet to prevent flickering and make sure the impact on the page is minimized.

- Configure DNS Preconnect/prefetch – Implement DNS-prefetch and preconnect tags for an extra boost on non-IE browsers. Preconnect allows the browser to set up early connections before an HTTP request is actually sent to the server. This includes DNS lookups, TLS negotiations, and TCP handshakes. This, in turn, eliminates roundtrip latency and saves time for users.

- Use lazy loading – Enable lazy loading (which is supported out-of-the-box in Dynamic Yield) to allow experiments to run asynchronously (e.g. below the fold).

- Use your own hosted CDN – This ensures your site won’t have an additional external dependency. You can use the CDN vendor you already have a contract with and make sure that there are servers as close to your visitors as possible. This will allow your own servers to optimize loading time, as they have full control over the content that is being served. At Dynamic Yield, for example, we work with Amazon Cloud Servers and deliver content directly through its CDN servers. We can also send content to your company’s own CDN if needed.

- Move to server-side – Personalization and A/B testing can be deployed server-side, and not just client-side. When implemented server-side, there is no client-side script to slow down page rendering. Dynamic Yield, for example, supports both server- and client-side deployments. Server-side APIs allow you to integrate personalization as a core part of your technology stack, avoid “flickers”, and accelerate page load.
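To illustrate the preconnect and DNS-prefetch tip from the list above, here is a small sketch that derives the two resource hint tags from a script URL. The `resource_hint_tags` helper and the CDN hostname are hypothetical; substitute the origin your own testing script is actually served from.

```python
from html import escape
from urllib.parse import urlsplit


def resource_hint_tags(script_url: str) -> list[str]:
    """Build preconnect and dns-prefetch tags for the origin serving script_url."""
    parts = urlsplit(script_url)
    origin = escape(f"{parts.scheme}://{parts.netloc}", quote=True)
    return [
        # preconnect covers the DNS lookup, TCP handshake, and TLS negotiation.
        f'<link rel="preconnect" href="{origin}">',
        # dns-prefetch is a lighter hint for browsers without preconnect support.
        f'<link rel="dns-prefetch" href="{origin}">',
    ]


# Hypothetical usage: place the resulting tags early in the page's <head>.
tags = resource_hint_tags("https://cdn.example.com/scripts/testing.js")
```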

JavaScript-rendered experiences, and rankings

Google uses a headless browser to render JavaScript code and effectively imitate real users’ browsing experiences. It uses this technology to understand pages the way users experience them (compared to the old days, when it only used “simple” bots to fetch text, media, and links from web pages). With that said, it’s not clear to what extent Google can, or does, emulate different sessions and user characteristics, and thus identify each personalized experience or testing variation. For example, will Googlebot crawl the same page from different countries, to try and identify location-based experiences? Probably not, although in theory, it can do this when needed. While Googlebot is mostly US-based, Google claims to also sometimes do local crawling – the only way to actually see would be to analyze the server’s logs.
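A log analysis of this kind can start from something like the sketch below, which counts requests whose User-Agent mentions Googlebot, grouped by client IP and path. It assumes logs in the common “combined” access log format; the regular expression and the `googlebot_hits` helper are illustrative, not a complete parser. Keep in mind that a User-Agent string can be spoofed, so verify the IP addresses (Google documents a reverse DNS check) before drawing conclusions.

```python
import re
from collections import Counter

# Assumed "combined" access log format: IP, identity, user, [time],
# "request line", status, size, "referer", "user agent".
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"$'
)


def googlebot_hits(lines):
    """Count requests claiming to be Googlebot, keyed by (client IP, path)."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("ip"), match.group("path"))] += 1
    return hits
```

Grouping by IP address is what lets you look up where the crawl requests came from.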

In the past, Google released an official statement about its ability to render JavaScript, saying that “sometimes things don’t go perfectly during rendering, which may negatively impact search results for your site.” That’s why, when generating dynamic content variations, it is strongly advised to include the original static content in the source code.

Therefore, I wouldn’t use a personalization platform to dynamically inject content that is important for SEO. Instead, I’d make sure that content that is important for SEO appears in the static source code of the pages. By doing that, you ensure that non-personalized user experiences or users without JS-enabled browsers will get the default, fallback content. You also ensure that most bots, Googlebot included, will be exposed to the content that is important for your SEO strategy. It’s safe to assume that, generally speaking, Google’s ranking algorithms rely on the default, static version of a page, rather than on the potentially unlimited number of personalized variations that a given page can have.
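One way to check this in practice is to scan a page’s raw HTML, before any JavaScript runs, for the phrases your SEO strategy depends on. The sketch below uses only Python’s standard library. The `missing_from_static_source` helper and the sample markup are hypothetical, and the check is a simple substring match rather than a full audit.

```python
from html.parser import HTMLParser


class _TextCollector(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_static_source(html_source, required_phrases):
    """Return the phrases that do not appear in the static (unrendered) text."""
    collector = _TextCollector()
    collector.feed(html_source)
    collector.close()
    text = " ".join(" ".join(collector.chunks).split())  # normalize whitespace
    return [phrase for phrase in required_phrases if phrase not in text]
```

Any phrase this returns exists only in scripts or is injected later, so it is a candidate for moving into the static markup.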

As the impact of JavaScript-injected content on sites’ SEO has yet to be comprehensively determined, we tested how Googlebot crawls and indexes JavaScript-based content.

Below is a summary of the experiments conducted and how our Dynamic Content capabilities, which personalize experiences for visitors by dynamically replacing the content, design, and layout, affect a site’s SEO, including crawling and indexation.

Tests – six different client-side implementations

Method – Dynamic Content (inline), Dynamic Content (placement), Dynamic Content (Inline Placement), Dynamic Content (Custom Action JS), Recommendations (Placement), Recommendations (Inline-Placement)

Validation – unique strings used to identify dynamic content in (Google) search results

So, can Google crawl and index JavaScript-based content?

Conclusion – Yes, Google is able to crawl and index all JavaScript-based content

Content generated using JavaScript (Dynamic Content), custom actions, and algorithm-driven recommendations is shown as plain text in Google’s search engine results page (SERP). These results held for all implementation methods tested.

Take a deeper look into the JavaScript SEO experiment by visiting dynamicyield.com/seo.

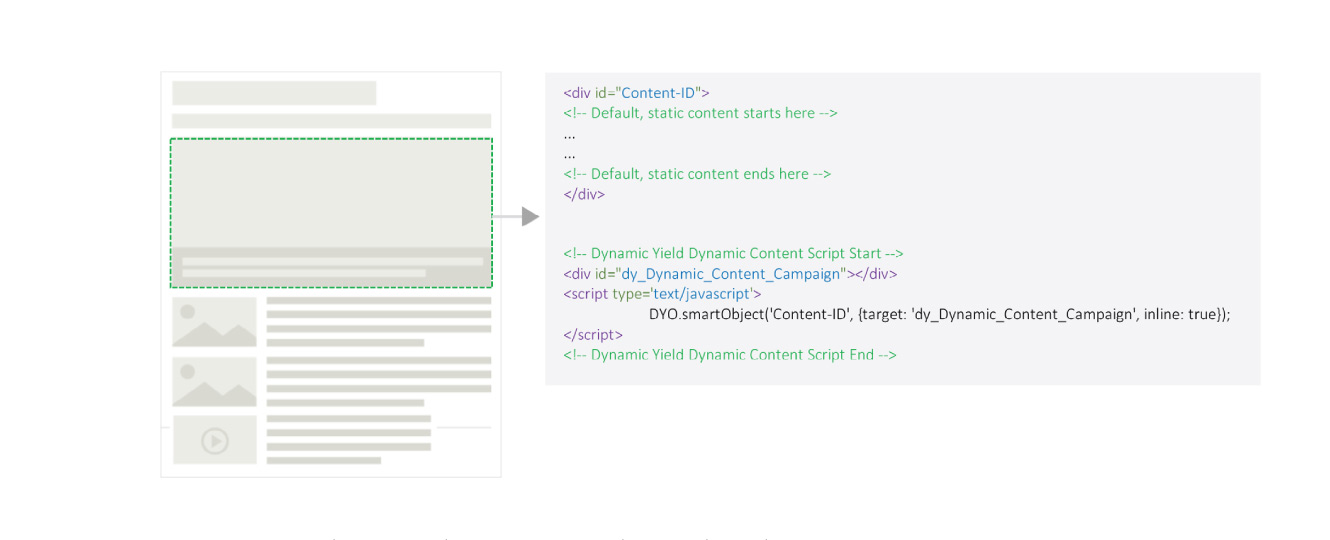

Example of a client-side SEO-friendly optimization

Wrap the static content inside a container with a specific CSS Selector and use Dynamic Content to replace the static content located within the specific CSS Selector with new, dynamically-generated content variations.

The Dynamic Content tags will then be used to personalize experiences for visitors by dynamically replacing the content, design, and layout.

Organic rankings are not affected, since Googlebot is served the static version of the page.

To Summarize

Google accepts A/B testing and personalization as ways to improve the customer experience, and it crawls and indexes JavaScript-generated content. Marketers concerned about the impact on SEO simply need to follow best practices during testing and experimentation in order to avoid any unnecessary penalties. Hopefully, this article has provided the resources for teams to do so confidently.