How to never run out of conversion rate optimization ideas

Learn what to do when your A/B testing routine starts to feel stale and you're struggling to identify ideas for new experiments and CRO campaigns. Here are six proven ways to get great results.

Here’s what you need to know:

- Move beyond low-hanging fruit by systematically brainstorming new A/B testing ideas using an idea generation matrix that lists all possible testing categories and elements.

- Involve your team in the brainstorming process to generate a wider range of ideas and perspectives.

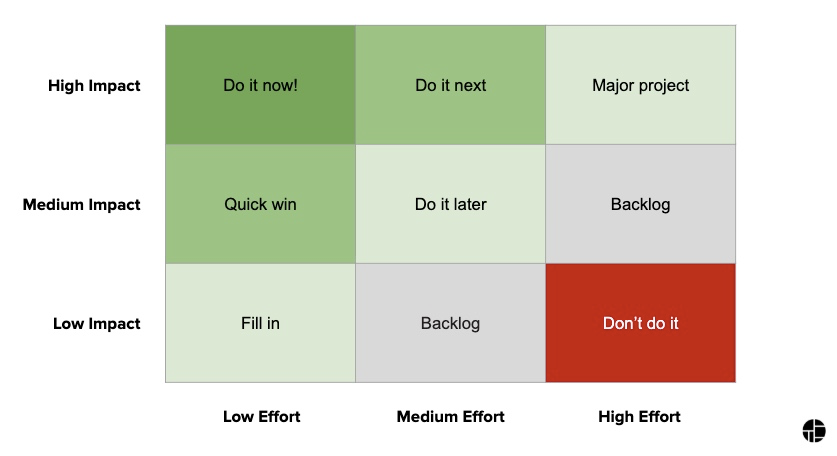

- Prioritize A/B testing ideas using a framework like the Impact-Effort Prioritization Matrix to focus on those with the highest potential return on investment.

- Ensure each A/B test has a clear purpose and answers five basic questions: What, Who, Where, When, and Why. This will help you design more focused and effective tests.

- Don’t neglect A/B testing emails, even though they have fewer testable elements than websites. You can still glean valuable insights and optimize email performance through A/B testing.

- Build a library of effective email subject lines through A/B testing to consistently capture attention and improve email open rates.

When companies first start A/B testing their website, email, and other channels, it usually doesn’t take long for them to see big results. Just changing the placement, size, and color of their CTA buttons, for example, can yield huge improvements to their conversion rates. There’s a lot of excitement about conversion rate optimization in those first few months, but over time the momentum and enthusiasm driving all that testing starts to slow down.

One of the most common reasons that A/B testing programs lose steam is that the people who create the tests start to run out of ideas. This can happen quickly, as marketers and analysts tend to start with the lowest-hanging fruit. With the most obvious test ideas out of the way, it’s a little more challenging to know what kinds of things to test next.

People also have their own preferences and biases when it comes to testing priorities. A particular analyst or marketer might be a highly visual person, for instance, and tend to only think about tests that involve visual elements like color variants or layout. Another person might focus on the content of the website or email, A/B testing alternate site copy, headlines, or offers. Other people think in structural terms and focus on elements like which form requirements generate the highest number of conversions.

None of these are bad ideas for A/B tests, but there are only so many variants of each kind that are worth exploring. Eventually, people who stick to these same testing ideas will get to the point where they start to see diminishing returns from their tests. The gains in conversions and other metrics become smaller and smaller, making it seem like the A/B tests aren’t generating worthwhile results. To generate new and exciting results again, they need to try new kinds of tests.

When your A/B testing routine starts to feel stale, it’s time to try something new. Here is a process that may help you ideate and get great results.

1. Create an idea generation matrix

One of the most helpful starting points for reviving your A/B testing strategy is to list all the broad categories you are able to test, then cross that list with all the kinds of tests you can run. The resulting testing matrix is a quick reminder of which elements can be tested and which kinds of tests are available for each.

For websites, these categories might include:

- UX, layout, and design elements

- Call-to-action button size, copy, and color

- Promotional offers and incentives

- Site copy and messaging

- Social proof, product demand alerts, and scarcity messaging

- Page functionality

- Recommendation algorithms and strategies

- Navigational menus

Laying the categories out this way makes it easier to create tests and to spot testing categories that you might have overlooked.
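As a rough illustration, the matrix can be sketched in a few lines of Python by pairing every category with every kind of test. The category names come from the list above; the test types, the `build_matrix` and `untested_cells` helpers, and the sample history are assumptions made up for this example, so swap in whatever your own testing platform supports.

```python
from itertools import product

# Categories adapted from the list above.
CATEGORIES = [
    "UX, layout, and design elements",
    "Call-to-action buttons",
    "Promotional offers and incentives",
    "Site copy and messaging",
    "Social proof and scarcity messaging",
    "Page functionality",
    "Recommendation algorithms and strategies",
    "Navigational menus",
]

# Assumed test types, for illustration only.
TEST_TYPES = ["A/B test", "multivariate test", "segment-targeted test"]


def build_matrix(categories, test_types):
    """Return every (category, test type) pair as one cell of the matrix."""
    return list(product(categories, test_types))


def untested_cells(matrix, already_run):
    """Return the cells that no past experiment has covered yet."""
    done = set(already_run)
    return [cell for cell in matrix if cell not in done]


matrix = build_matrix(CATEGORIES, TEST_TYPES)
history = [("Call-to-action buttons", "A/B test")]  # hypothetical past test
gaps = untested_cells(matrix, history)
print(f"{len(gaps)} of {len(matrix)} combinations still untested")
```

Keeping a running history of past tests next to the matrix is what makes the overlooked cells visible; a spreadsheet works just as well as code.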

2. Get as many people involved in the process as possible

If your testing team is small (often, it’s just one or two people), coming up with new ideas for A/B tests can quickly become a chore. At the same time, there may be other people in marketing, sales, and management who have tons of ideas they would love to try.

Get as many of these people engaged in the process as possible. This can be an informal conversation, such as a group email or a dedicated Slack channel, or a weekly or monthly meeting. This team can then discuss new testing ideas and examine the results of previous tests to consider future iterations and improvements.

And you can always visit Dynamic Yield’s Inspiration Library for more than a hundred personalization examples from real brands to leaf through and kickstart ideation.

3. Prioritize your A/B test ideas

Some A/B test ideas are better than others. Judging which tests should take priority over others, however, can be a serious challenge when your team includes people from different departments, many of whom will have their own priorities (or outrank each other). One solution to this problem comes from a large American fashion brand:

Once a month, they would gather their digital marketing team together and hand out sticky pads to everyone. They would then spend five or ten minutes brainstorming ideas for tests, one idea per sticky note. This allowed them to quickly create ideas for as many different kinds of tests as possible.

Another option is to use our Impact-Effort Prioritization Matrix framework to identify high-impact, low-effort campaign ideas (read further about the framework and how to develop and prioritize a CRO strategy):

Impact-Effort Prioritization Matrix

Lastly, teams can use this handy personalization prioritization template to evaluate and prioritize experimentation ideas.
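For teams that prefer to score ideas in code rather than on a whiteboard, here is a minimal sketch of impact-effort prioritization. The 1 to 5 scale, the threshold, the quadrant labels, and the sample ideas are all assumptions for illustration; they are not taken from the framework itself.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int  # 1 (low) to 5 (high), estimated by the team
    effort: int  # 1 (low) to 5 (high), estimated by the team


def quadrant(idea, threshold=3):
    """Place an idea in one of four impact-effort quadrants (labels assumed)."""
    high_impact = idea.impact >= threshold
    low_effort = idea.effort < threshold
    if high_impact and low_effort:
        return "quick win"
    if high_impact:
        return "major project"
    if low_effort:
        return "fill-in"
    return "deprioritize"


def prioritize(ideas):
    """Sort by highest impact first, breaking ties with lowest effort."""
    return sorted(ideas, key=lambda idea: (-idea.impact, idea.effort))


# Hypothetical ideas and scores.
ideas = [
    Idea("Footer link color", impact=1, effort=1),
    Idea("New recommendation strategy", impact=5, effort=5),
    Idea("Shorter checkout form", impact=5, effort=2),
    Idea("Full navigation redesign", impact=2, effort=5),
]

for idea in prioritize(ideas):
    print(f"{idea.name}: {quadrant(idea)}")
```

The scores are only as good as the estimates behind them, so it helps to have the whole group agree on impact and effort before sorting.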

4. Remember the five basic questions

Every A/B test is an experiment, and that experiment should answer a specific question. In creating that experiment, however, you should always be able to answer the five basic questions about what you are hoping to learn. We all know these questions: What, Who, Where, When, and Why?

- What specific concept or preference is being tested?

- Who is the audience? Are all visitors being tested, or just some select segment? You should be using different tests for different audiences.

- Where on the site are you testing, and under what circumstances? Is it the homepage, or are you limiting it to targeted landing pages? Why is this test happening in this specific location?

- When will the test run? Some tests make more sense at specific times. Some tests may generate the same results no matter when they happen, while others will only provide meaningful insights if they happen during peak site hours, on the weekend, or during the holidays.

- Why are you running the test? What is the hypothesis of the test? What will you learn from the test? What results are you hoping for? For instance, are you looking to reduce shopping cart abandonment rates? How will you incorporate what you’ve learned into the site, and into the next round of tests?

There’s more to these tests than simply seeing what happens when you change the size of a headline or replace the default product image. Your real goal should be to use the data from your tests to create an overarching theory about how these elements come together to generate the results you are looking for.
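One lightweight way to enforce the five questions is to treat them as required fields on every test plan. The sketch below assumes a hypothetical `ExperimentPlan` record and a `missing_answers` helper; neither belongs to any particular testing tool.

```python
from dataclasses import dataclass, fields


@dataclass
class ExperimentPlan:
    what: str = ""   # concept or preference being tested
    who: str = ""    # audience or segment
    where: str = ""  # page or placement
    when: str = ""   # timing of the test
    why: str = ""    # hypothesis and intended use of the results


def missing_answers(plan):
    """Return the names of the questions that are still blank."""
    return [f.name for f in fields(plan) if not getattr(plan, f.name).strip()]


# Hypothetical plan with one question left unanswered.
plan = ExperimentPlan(
    what="Free-shipping banner versus no banner",
    who="Returning visitors",
    where="Cart page",
    why="Reduce shopping cart abandonment",
)
print(missing_answers(plan))
```

A plan that still has blanks is a signal to pause before launching, since a test that cannot say when or why it runs is hard to interpret afterward.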

5. Don’t forget to A/B test your emails

When compared to websites, emails only have a handful of testable elements. As a result, many people have come to believe that A/B testing emails isn’t a good use of resources. That’s wrong.

Email is actually one of the fastest and easiest ways to see real results from A/B testing. It’s a perfect sandbox to test out segmentation strategies, and even seemingly crazy ideas.

6. Create an A/B email testing arsenal

While it’s certainly worth A/B testing other aspects of your emails, the focus should often be on the subject lines. Once people open the emails, you have more options, but there’s no getting around the reality that most of the testing should be focused on that one crucial element, especially if you have a smaller mailing list. Unfortunately, there are only so many ways to write an effective subject line. This makes A/B testing of those subject lines an essential part of the process.

What you’re really doing with email A/B testing is building up an arsenal of ideas. If your open rates start to slip, you can use these ideas to shake the recipients out of their rut. It’s not about finding the “one true email subject line” that will always have the highest open rates. It doesn’t work that way. It might work for a few emails, but eventually, the recipients will get used to the new format and lose their curiosity. Your email open rates will then go back to where they were before.

Let’s say that you have an arsenal of three dozen techniques that have been proven to work through A/B testing. Some will be more effective than others, but they all have a statistically significant effect on open rates. If you only send an email every other week, you have more than a year’s worth of ideas to try: 36 techniques at one email every two weeks covers 72 weeks.
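To decide whether a subject line has earned a place in the arsenal, you need some check that the lift in open rate is not just noise. One common option, sketched below, is a two-sided two-proportion z-test. This is an assumed choice for illustration rather than a method prescribed here, and the function name and sample counts are hypothetical.

```python
from math import erf, sqrt


def open_rate_z_test(opens_a, sent_a, opens_b, sent_b):
    """Two-sided two-proportion z-test on open rates; returns (z, p_value).

    Assumes both groups are reasonably large and that the pooled open
    rate is neither 0 nor 1 (otherwise the standard error is zero).
    """
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value


# Hypothetical send: control subject line (A) versus variant (B).
z, p = open_rate_z_test(opens_a=200, sent_a=1000, opens_b=250, sent_b=1000)
verdict = "keep in arsenal" if p < 0.05 else "not proven yet"
print(f"z = {z:.2f}, p = {p:.4f}: {verdict}")
```

With smaller mailing lists the same lift will often fail this check, which is one more reason to concentrate testing on a single high-leverage element such as the subject line.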

To help you with this, here’s a simple (but hopefully effective) conversion rate optimization and A/B testing tracker that should help you categorize your ideas, come up with new ones, and maintain a list of results for posterity.


Wrapping Up

Remember, the purpose of A/B testing is to learn things quickly and to gather data you can put to immediate use. Over time, your A/B tests should become laser-focused and increasingly advanced, moving beyond basic design elements into more complex categories like site functionality. Before long, you’ll be able to A/B test everything, gathering crucial insights into product suggestion algorithms, pre-orders, user personalization, customer surveys, and segment-specific changes. This can even apply to your mobile site, such as the “one-click buy” versus “swipe to buy” tests that Amazon has been running on mobile users lately.

There’s no reason to let your A/B testing become stagnant. Anything and everything about your site, your email, your social media, and even your customers’ behaviors, can be tested. The more you test and learn, the better your results.