Why A/B Testing and Personalization Are More Powerful Together

Although they represent two distinct approaches to improving the user experience, A/B testing and personalization can offer exponential benefits in combination, and businesses won’t want to miss out on them.

Here’s what you need to know:

- A/B testing and personalization, when combined, can significantly improve user experience by delivering the most relevant experience to each individual.

- A/B testing helps identify the best performing variations of creative elements, while personalization tailors the experience to individual customers.

- Combining these approaches streamlines processes, amplifies results, and boosts customer satisfaction.

- Marketers can leverage this power by segmenting audiences for A/B testing, testing personalization strategies, and using real-time data for continuous optimization.

A/B testing and personalization represent two distinct methodologies. A/B testing focuses on experimenting with different creative elements, copy, layouts, and even algorithms to improve key business metrics and the overall user experience. Personalization, by contrast, aims to match the most relevant experience to an individual customer at the right moment in time.

Though fundamentally different in approach, combining these two strategies can actually offer exponential benefits. In this post, we’ll explore why that is, as well as how teams can go about doing so.

A/B testing’s limitations

The premise of A/B testing is simple:

Compare two (or more) different versions of something to see which performs better and then deploy the winner to all users for the most optimal overall experience.

A/B testing and conversion rate optimization (CRO) teams have thus invested significantly in launching all sorts of experiments to improve different areas and experiences across the site, native app, email, or any other digital channel, and then continuously optimizing them to drive incremental uplift in conversions and specific KPIs over time.

However, unless a company generates tons of traffic and has a huge digital landscape to experiment on, there may come a point of diminishing returns: no matter how many tests these teams run, or how big and sophisticated each experiment is, the uplift gained stops growing in proportion to the effort invested.

This largely has to do with the fact that the classic approach to A/B testing offers a binary view of visitor preferences and often fails to capture the full range of factors and behavior that define who they are as individuals.

Moreover, A/B tests yield generalized results based on a segment’s majority preferences. And while a brand may find a particular experience to yield more revenue on average, deploying it to all users would be a disservice to a significant portion of consumers with different preferences.

Let me illustrate with a few examples:

If Warren Buffett and I had an average net worth of $117.3 billion USD, would it make sense to recommend the same products to both of us?

Probably not.

Or consider a retailer that sells both men’s and women’s products and runs a classic A/B test on its homepage to identify the top-performing hero banner variation. Because 70% of its audience is women, the women’s variation outperforms the men’s.

This test would suggest that the women’s hero banner be applied to the entire population, but it surely wouldn’t be the right decision.

To put it simply:

- Averages are often misleading when used to compare different user groups

- The best-performing variation changes for each customer segment and user

- Results can also be influenced by contextual factors like geo, weather, and more
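The first two points can be shown with a small Python sketch. The numbers below are invented for illustration (they do not come from any real test) and loosely follow the retailer example above, where each banner is shown to an audience that is 70% women. The function names are hypothetical as well.

```python
# Hypothetical results for two hero banners, broken down by audience segment.
# All numbers are made up for illustration.
results = {
    "women": {
        "womens_banner": {"visitors": 7000, "conversions": 420},
        "mens_banner": {"visitors": 7000, "conversions": 210},
    },
    "men": {
        "womens_banner": {"visitors": 3000, "conversions": 60},
        "mens_banner": {"visitors": 3000, "conversions": 150},
    },
}


def conversion_rate(stats):
    return stats["conversions"] / stats["visitors"]


def overall_winner(results):
    """Pool every segment together, as a classic A/B test readout would."""
    totals = {}
    for segment in results.values():
        for variation, stats in segment.items():
            pooled = totals.setdefault(variation, {"visitors": 0, "conversions": 0})
            pooled["visitors"] += stats["visitors"]
            pooled["conversions"] += stats["conversions"]
    return max(totals, key=lambda v: conversion_rate(totals[v]))


def winner_per_segment(results):
    """Pick the best variation separately for each segment."""
    return {
        name: max(segment, key=lambda v: conversion_rate(segment[v]))
        for name, segment in results.items()
    }


print(overall_winner(results))      # womens_banner
print(winner_per_segment(results))  # {'women': 'womens_banner', 'men': 'mens_banner'}
```

With these made-up figures, the pooled readout crowns the women’s banner (4.8% vs. 3.6%), even though the men’s banner converts more than twice as well among men (5% vs. 2%).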

This is not to say, of course, that there isn’t a time and place for leveraging more generalized results. For instance, if you were testing a new website or app design, it would make sense to aim for one consistent UI that worked best on average vs. dozens, hundreds, or even thousands of UI variations for different users.

However, the days of faithfully taking a “winner-take-all” approach to page layout, messaging, content, recommendations, offers, and other creative elements are over. And that’s okay, because it means money will no longer be left on the table by failing to deliver the best variation to each individual user.

Unlocking greater relevance with personalization

Personalization is all about adjusting the site experience to consumers based on their unique behavior, preferences, and intent, which has become an expectation in today’s digital landscape. This alone can boost customer satisfaction and loyalty.

And while personalization doesn’t necessarily depend on A/B testing, it might come as a surprise that best practice in personalization is built on the foundations of A/B testing. The only difference is that the best version of a particular experience is determined at the audience level rather than on average.

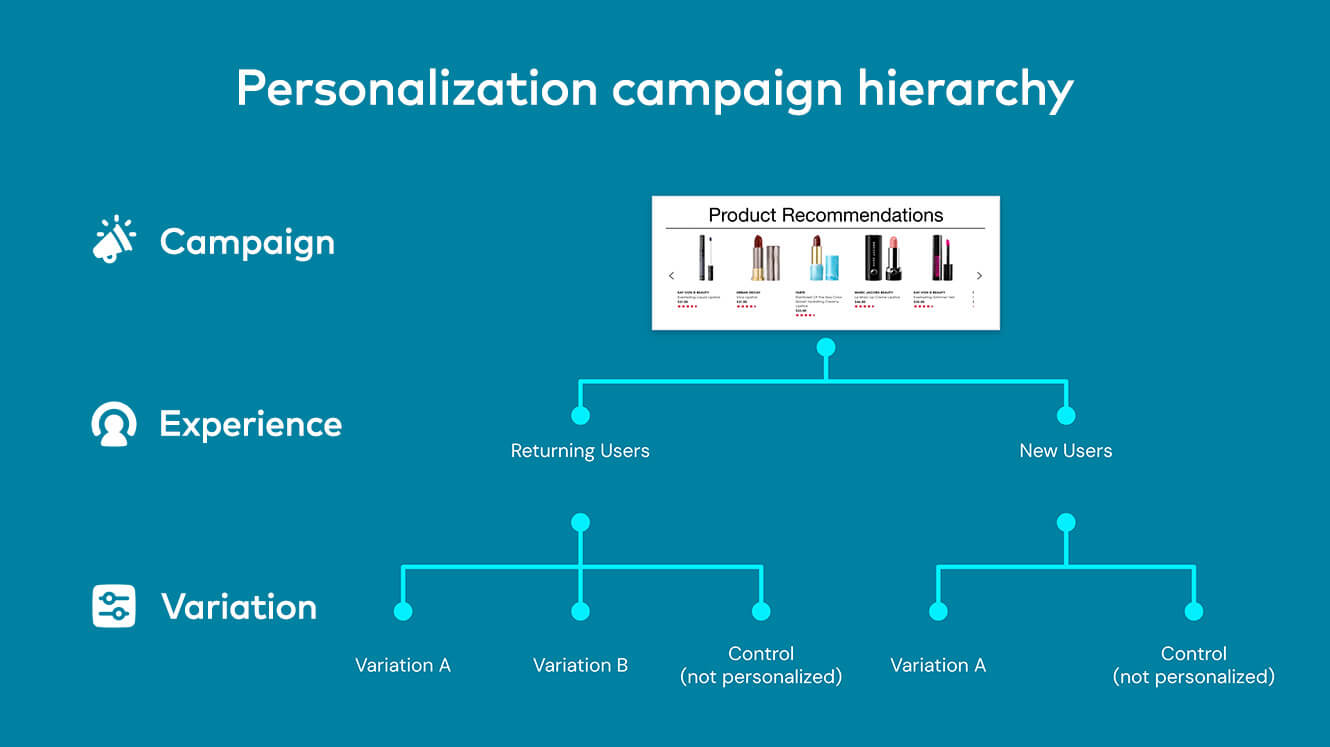

Let’s break down the basic structure of what this would look like within a personalization campaign. Instead of including one experience with multiple variations to compare against a control group, as in a traditional A/B test, we go a step further: we create multiple experiences targeted at different audiences, each with multiple variations that can be A/B tested to determine the best-performing one.

This can be done through simple rule-based targeting, which uses if/then logic to tailor the customer journey according to a set of manually programmed rules. Teams can A/B test these experiences, validate the results once they reach statistical significance, and then iterate accordingly.
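As a rough sketch of that structure, the Python snippet below models a campaign as a list of experiences, each with a targeting rule and its own variations. The audience names, rules, variation names, and hashing scheme are all hypothetical, chosen only to illustrate the idea; real platforms will differ.

```python
import hashlib

# Hypothetical campaign: each experience targets one audience via a rule
# and holds the variations to be A/B tested within that audience.
EXPERIENCES = [
    {
        "name": "returning_mobile_shoppers",
        "rule": lambda user: user.get("device") == "mobile" and user.get("visits", 0) > 1,
        "variations": ["control", "free_shipping_banner"],
    },
    {
        "name": "first_time_visitors",
        "rule": lambda user: user.get("visits", 0) <= 1,
        "variations": ["control", "welcome_offer"],
    },
]

# Served when no targeting rule matches.
FALLBACK = {"name": "everyone_else", "variations": ["control"]}


def match_experience(user):
    """IF a rule matches THEN serve that experience; the first match wins."""
    for experience in EXPERIENCES:
        if experience["rule"](user):
            return experience
    return FALLBACK


def assign_variation(user_id, experience):
    """Deterministically bucket a user so they see the same variation on every visit."""
    key = f"{experience['name']}:{user_id}".encode()
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(experience["variations"])
    return experience["variations"][bucket]


user = {"id": "u-123", "device": "mobile", "visits": 4}
experience = match_experience(user)
print(experience["name"], assign_variation(user["id"], experience))
```

Hashing the user ID together with the experience name is one common way to keep assignments stable across visits while keeping buckets independent between experiences.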

However, AI and machine learning have become table stakes when it comes to scaling personalization decision-making, as the above scenario can become a data-heavy process: it involves numerous test deployments, with granular measurement of every tested variation against each audience segment, to determine the optimal programmatic targeting rules. These technologies are also helpful in converting “losing” tests into personalization opportunities, since a variation that loses overall may still perform better for a particular audience.

These advanced technologies analyze the performance of each variation across every traffic segment in real time to serve the most relevant content to select audience groups. Further, 1:1 personalization can be accomplished with affinity-based personalization capabilities, which leverage the process of affinity profiling to algorithmically match each person with personalized recommendations, product offers, and content.
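One technique often used for this kind of per-segment, real-time allocation is a multi-armed bandit. The sketch below uses Thompson sampling in Python, which is an assumption made for illustration and not a description of any particular vendor’s algorithm. The class name, segment names, and “true” conversion rates in the simulation are all hypothetical.

```python
import random
from collections import defaultdict


class SegmentBandit:
    """Thompson sampling with one Beta posterior per (segment, variation) pair."""

    def __init__(self, variations, seed=None):
        self.variations = list(variations)
        self.rng = random.Random(seed)
        # [successes, failures] counts; a Beta(1, 1) prior is added when sampling.
        self.counts = defaultdict(lambda: [0, 0])

    def choose(self, segment):
        """Sample a plausible conversion rate per variation and serve the highest."""
        def sample(variation):
            successes, failures = self.counts[(segment, variation)]
            return self.rng.betavariate(successes + 1, failures + 1)
        return max(self.variations, key=sample)

    def record(self, segment, variation, converted):
        """Update the counts with the observed outcome."""
        self.counts[(segment, variation)][0 if converted else 1] += 1

    def posterior_mean(self, segment, variation):
        successes, failures = self.counts[(segment, variation)]
        return (successes + 1) / (successes + failures + 2)


# Simulated traffic with made-up "true" conversion rates per segment.
TRUE_RATES = {
    ("women", "womens_banner"): 0.06, ("women", "mens_banner"): 0.03,
    ("men", "womens_banner"): 0.02, ("men", "mens_banner"): 0.05,
}
bandit = SegmentBandit(["womens_banner", "mens_banner"], seed=7)
sim = random.Random(7)
for _ in range(5000):
    segment = "women" if sim.random() < 0.7 else "men"
    variation = bandit.choose(segment)
    converted = sim.random() < TRUE_RATES[(segment, variation)]
    bandit.record(segment, variation, converted)

for segment in ("women", "men"):
    print(segment, {v: round(bandit.posterior_mean(segment, v), 3) for v in bandit.variations})
```

Unlike a fixed 50/50 split, this approach gradually shifts traffic within each segment toward the variation that appears to perform best there, while still exploring the alternatives occasionally.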

This level of personalization allows companies to be more effective and targeted with their marketing strategy while also engaging consumers in a more nuanced, meaningful, and relevant way.

Coupling A/B testing with personalization

If you asked an A/B testing or CRO team and a team dedicated to personalization what their work specifically involves, you would find their answers to be eerily similar.

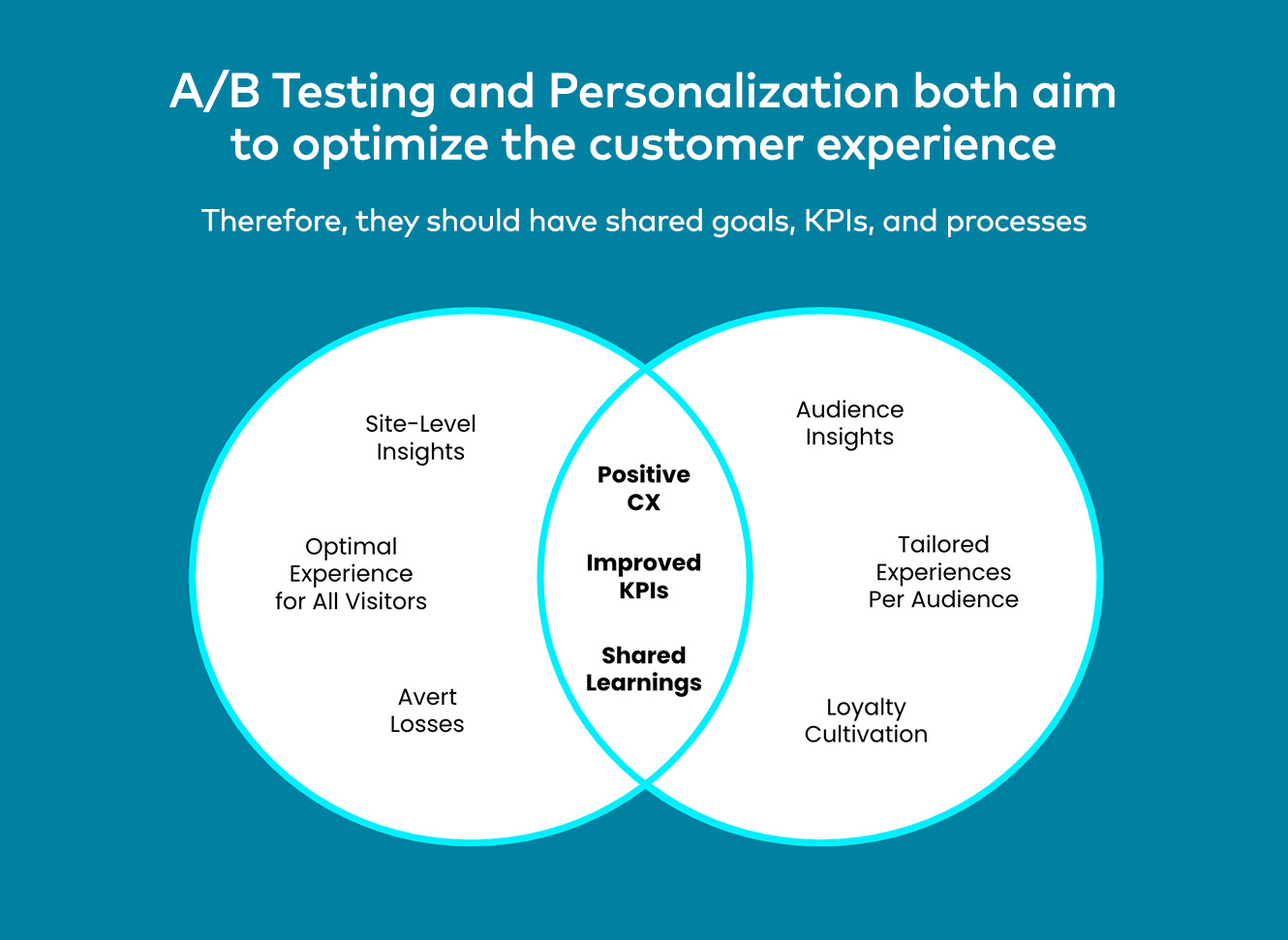

Take the eye-opening diagram below, which was depicted during a keynote from JD Sports | Finish Line during a Personalization Pioneers event (full recap here):

It is a sentiment shared by many others who are beginning to realize that both A/B testing and personalization:

- Share a focus on creating a positive customer experience

- Are looking to influence and improve the same KPIs

- Can benefit from the same collected learnings

These teams also often require the same internal resources and even the same tools! This is why it’s so important that A/B testing and personalization do not operate in silos and instead become part of a shared roadmap with aligned KPIs.

The combination of the two can not only streamline processes and operations but also generate exponential results because it allows for both broad and fine-tuned insights into consumer behavior.

Here’s how you can incorporate their combined power into your marketing strategy for better results:

1. Segment-Based A/B Testing

Instead of performing A/B tests on your entire audience, divide your audience into meaningful segments based on shared characteristics (try the Primary Audiences approach, which is meant to scale from macro to micro). Then, perform A/B tests on these segments. This segmented experimentation approach can provide a more nuanced understanding of different consumer behaviors and help tailor experiences to specific groups.

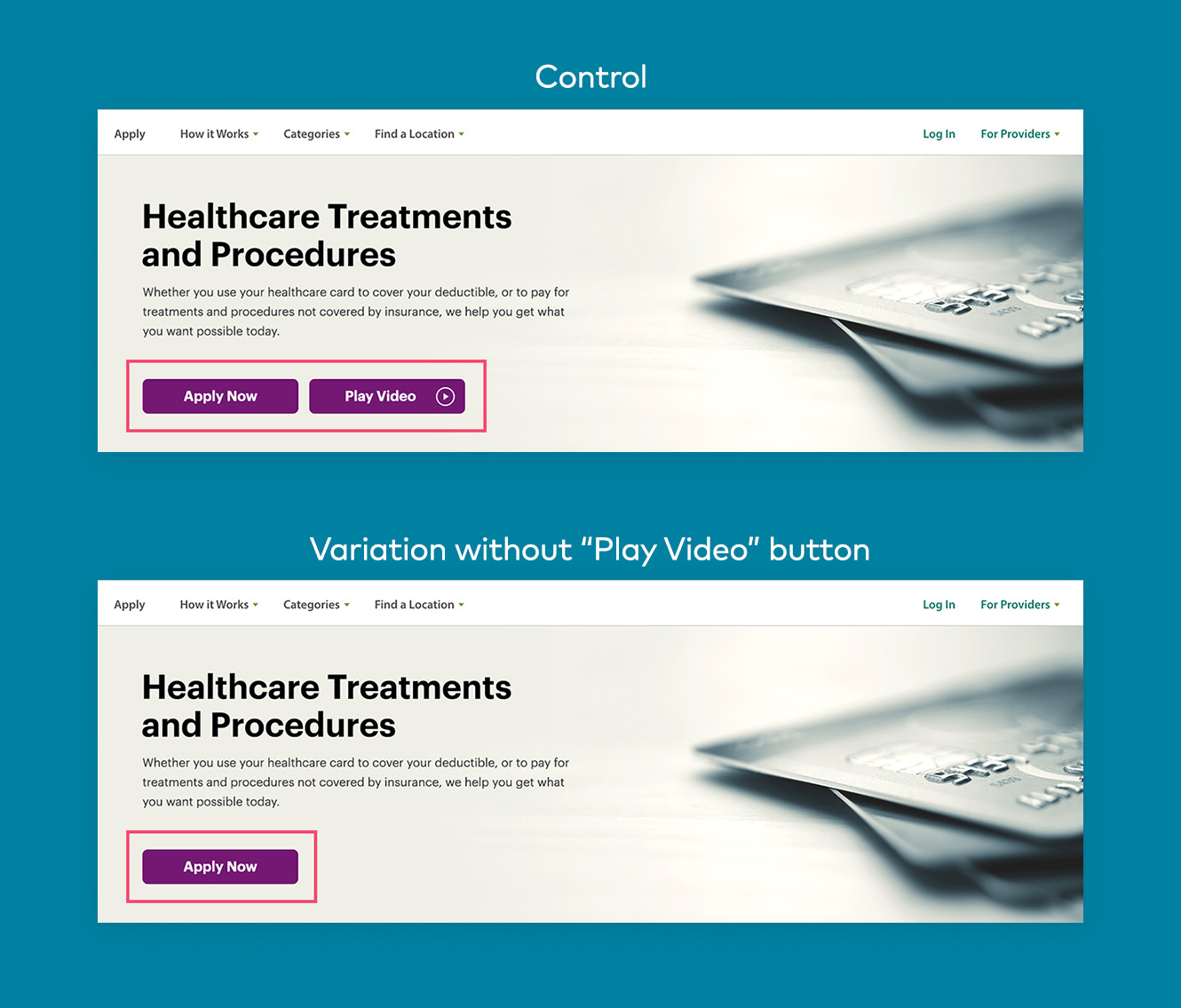

For example, Synchrony increased its application submission rate by 4.5% among high-intent users by running an experiment for this segment that tested the removal of extraneous call-to-action buttons from the banner.

Upon analysis, the company noticed one specific UX change – removing the “Play Video” CTA button from its banner – stopped high-intent users from getting distracted, enabling them to actually learn more about Synchrony’s numerous services.

2. A/B Test Personalization Strategies

Use A/B testing to determine which personalization strategies work best. For instance, you might test several product recommendation algorithms against one another to see which leads to better click-through or add-to-cart rates for a given audience.
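To judge whether one strategy really beats another for a given audience, a common choice is a two-proportion z-test. The Python sketch below is a minimal version under that assumption. The strategy names and add-to-cart counts are invented for illustration, and the function does not handle the edge case where no conversions at all are observed.

```python
from math import erfc, sqrt


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


# Hypothetical add-to-cart counts for one audience segment.
most_popular = {"add_to_carts": 400, "visitors": 10_000}
similar_users = {"add_to_carts": 480, "visitors": 10_000}

z, p_value = two_proportion_z_test(
    most_popular["add_to_carts"], most_popular["visitors"],
    similar_users["add_to_carts"], similar_users["visitors"],
)
print(f"z = {z:.2f}, p = {p_value:.4f}")
if p_value < 0.05:
    print("The difference is statistically significant at the 5% level.")
```

Running the same comparison separately for each audience segment shows whether a strategy wins everywhere or only for specific groups.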

3. Real-Time Strategy Adaptation

As you gather data from your A/B tests, use this information for the continuous optimization and refinement of your personalization strategy. This real-time adaptation allows for a more dynamic and effective marketing strategy that continually evolves to meet consumer needs.

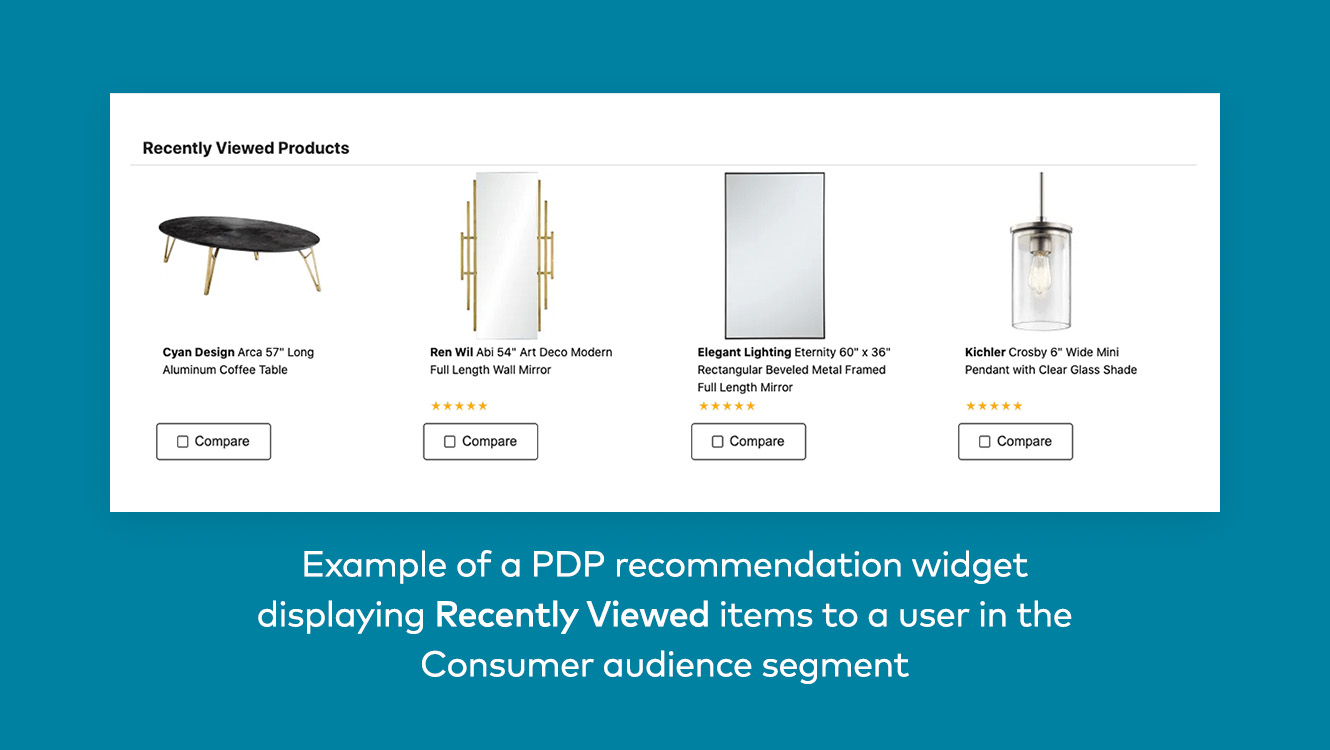

For example, Build with Ferguson generated an 89% uplift in purchases from recommendations with this approach, starting with an audience-first strategy (based on the Rooted Personalization framework).

The team tested various recommendation strategies and ultimately found that its ‘Consumer’ audience segment tended to engage with recommended items that other users with similar behaviors and interests had interacted with.

Using these findings, Build with Ferguson optimized the performance of its recommendations across the site and also discovered that users who interact with recommendations spend 13% more and purchase 2.4 more items on average.

A/B testing and personalization – natural extensions of one another

A/B testing has historically been about determining the best experience overall, while personalization aims to provide the best experience at an audience or individual level. And while there is a time and a place for both, combining the two can translate into increased customer satisfaction and loyalty for businesses, with key experiences made more relevant through personalization and each strategy’s results maximized through A/B testing.

P.S. For more information on how these two practices come together, I suggest you check out this A/B Testing & Optimization course, which touches on how to set up the right attribution configurations, select the right objective, analyze personalization test results, and ensure each one yields meaningful results.
