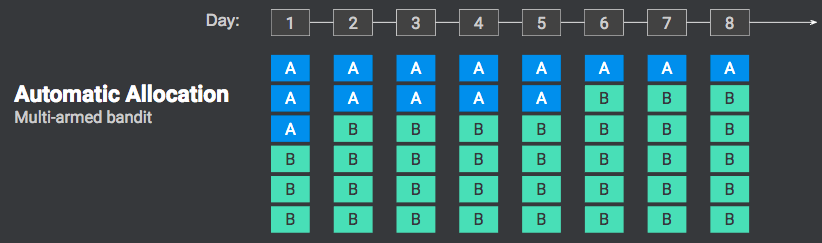

In traditional A/B testing, traffic is split evenly between two or more variations for the duration of the test. A multi-armed bandit approach instead allocates traffic dynamically: variations that perform well receive more traffic, and underperforming variations receive progressively less. Because traffic shifts toward better-performing variations while the test is still running, this approach can deliver results faster, since there's no need to wait for a single winning variation to be declared before acting on what has been learned.

The Multi-Armed Bandit, also known as “reweight”, is one of Dynamic Yield’s machine-learning-based optimization algorithms. It allocates traffic between variations automatically, shifting traffic in real time toward the winning variation.
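To make “reweighting” concrete, here is a minimal simulation sketch. It assumes (this is not taken from Dynamic Yield's documentation) that each variation's conversion rate has a Beta posterior and that its traffic weight is its estimated probability of being the best variation, recomputed at the end of each fixed time batch. The functions `reweight` and `run_experiment` and the conversion rates are hypothetical, made-up simulation inputs.

```python
import random


def reweight(conversions, impressions, draws=2000, seed=0):
    """Estimate each variation's probability of being the best.

    Assumption: a Beta(1 + conversions, 1 + non-conversions) posterior per
    variation; the resulting probabilities are used as traffic weights.
    """
    rng = random.Random(seed)
    n = len(conversions)
    best_counts = [0] * n
    for _ in range(draws):
        # Draw one plausible conversion rate per variation from its posterior.
        samples = [rng.betavariate(1 + c, 1 + (imp - c))
                   for c, imp in zip(conversions, impressions)]
        # Credit the variation whose sampled rate is highest in this draw.
        best_counts[max(range(n), key=lambda i: samples[i])] += 1
    return [count / draws for count in best_counts]


def run_experiment(true_rates, batches, visitors_per_batch, seed=1):
    """Simulate batched reweighting: weights stay fixed within each batch."""
    rng = random.Random(seed)
    n = len(true_rates)
    conversions = [0] * n
    impressions = [0] * n
    weights = [1 / n] * n  # start from an even split, as in classic A/B testing
    history = []
    for batch in range(batches):
        history.append(weights)
        for _ in range(visitors_per_batch):
            # Assign each visitor to a variation according to the current weights.
            arm = rng.choices(range(n), weights=weights)[0]
            impressions[arm] += 1
            # The simulated visitor converts with the variation's hidden true rate.
            if rng.random() < true_rates[arm]:
                conversions[arm] += 1
        # Recompute the weights only at the end of each batch.
        weights = reweight(conversions, impressions, seed=batch)
    return history, conversions, impressions


# 48 batches roughly correspond to one day if weights are recomputed every 30 minutes.
history, conversions, impressions = run_experiment(
    true_rates=[0.03, 0.06], batches=48, visitors_per_batch=200)
print([round(w, 2) for w in history[-1]])
```

Freezing the weights within a batch mirrors periodic recomputation: visitors inside one window all see the same allocation, and only the next window benefits from what was learned.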

Optimization: The Bandit Approach

The term “multi-armed bandit” refers to a class of problems to which many different solutions can be applied. Dynamic Yield goes beyond classic A/B/n testing and uses the Bandit Approach as a framework for a large number of algorithms that tackle different problems, all with the aim of achieving the best possible results.

To illustrate the multi-armed bandit problem, suppose we are facing 3 slot machines (each costing 25 cents per turn), and at each turn we may pull a single lever. Our goal is to maximize the total return. We begin by pulling the levers in a random order, and we want to gradually start exploiting what we have learned about the expected reward of each arm by pulling the one with the highest expected reward measured so far. A large body of literature exists on this problem, with many suggested solutions. One approach is a method called “Thompson Sampling,” in which at each turn we choose a variation at random, with probabilities calculated from the data collected so far. If a variation is very likely to be the best one, it is also very likely to be chosen.
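The slot-machine example can be sketched in a few lines of Thompson Sampling. This is a minimal illustration, assuming each pull is a simple win/loss (Bernoulli) outcome with a uniform Beta(1, 1) prior per machine; the payout amounts are ignored, and the function name `thompson_sampling` and the three hidden win rates are hypothetical.

```python
import random


def thompson_sampling(true_win_rates, turns, seed=0):
    """Play a Bernoulli slot-machine bandit with Thompson Sampling.

    Each arm's unknown win probability gets a Beta(1 + wins, 1 + losses)
    posterior, i.e. a uniform Beta(1, 1) prior updated by observed pulls.
    """
    rng = random.Random(seed)
    n_arms = len(true_win_rates)
    wins = [0] * n_arms
    losses = [0] * n_arms
    pulls = [0] * n_arms
    for _ in range(turns):
        # Sample one plausible win rate per arm from its current posterior.
        samples = [rng.betavariate(1 + wins[i], 1 + losses[i])
                   for i in range(n_arms)]
        # Pull the arm with the highest sample: arms likely to be best are
        # likely to be chosen, but uncertain arms still get explored.
        arm = max(range(n_arms), key=lambda i: samples[i])
        pulls[arm] += 1
        # The machine pays out with its hidden true probability.
        if rng.random() < true_win_rates[arm]:
            wins[arm] += 1
        else:
            losses[arm] += 1
    return pulls, wins


pulls, wins = thompson_sampling([0.05, 0.10, 0.20], turns=5000)
print(pulls)
```

Choosing the arm with the highest posterior sample is exactly what makes the selection probability match the probability of being best: early on, wide posteriors spread pulls across all three machines, and as evidence accumulates most pulls concentrate on the best one.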

Notice that up to now we have not used any of the vast amount of data we collect here at DY for every distinct visitor. In other words, the technique described so far helps us find the globally best variation, without personalization. So, let’s review the data we have and how we make use of it.

In Dynamic Yield, the Multi-Armed Bandit (MAB) algorithm recomputes the variation weights, and reallocates traffic accordingly, every 30 minutes. The following diagram illustrates the traffic allocation behavior over a week-long experiment between two variations, where a decision is required on the eighth day: