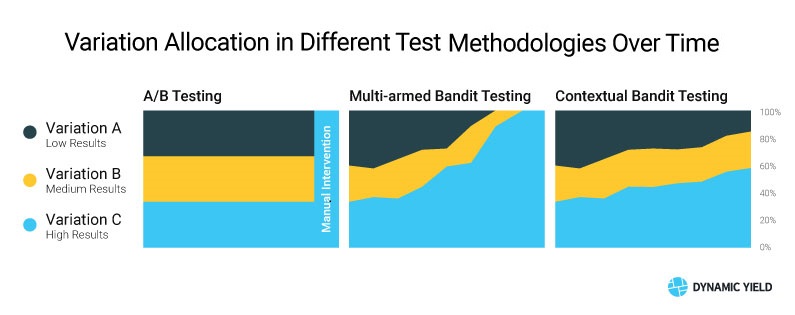

Multi-armed bandit algorithms go beyond classic A/B/n testing. They span a large family of algorithms suited to different problems, all aimed at achieving the best possible results, and they can also be made contextual. Contextual bandits for website optimization draw on an incoming stream of user context data, either historical or fresh, and use it to make better decisions in real time.
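To make "using context to decide" concrete, here is a minimal sketch of one simple contextual bandit. It assumes the context is a discrete user segment and that each visit either converts (reward 1) or not (reward 0). It keeps an independent Beta-Bernoulli posterior per (segment, variation) pair and picks variations by Thompson sampling. The class name, segment labels, variation names and conversion rates are all hypothetical, chosen only for illustration. Production systems often use richer models, such as LinUCB, that handle continuous user features.

```python
import random
from collections import defaultdict


class SegmentThompsonSampler:
    """Hypothetical contextual bandit for a discrete context (a user segment).

    Keeps an independent Beta-Bernoulli posterior per (context, arm) pair,
    so each segment can converge to its own best variation.
    """

    def __init__(self, arms, seed=None):
        self.arms = list(arms)
        self.rng = random.Random(seed)
        # Beta(1, 1) prior stored as [alpha, beta] = [successes + 1, failures + 1]
        self.params = defaultdict(lambda: [1, 1])

    def choose(self, context):
        # Draw a plausible conversion rate for each arm; serve the highest draw.
        samples = {
            arm: self.rng.betavariate(*self.params[(context, arm)])
            for arm in self.arms
        }
        return max(samples, key=samples.get)

    def update(self, context, arm, reward):
        # reward is 1 (conversion) or 0 (no conversion)
        alpha_beta = self.params[(context, arm)]
        if reward:
            alpha_beta[0] += 1
        else:
            alpha_beta[1] += 1


# Hypothetical true conversion rates, for illustration only.
TRUE_RATES = {
    ("female", "womens_promo"): 0.10, ("female", "mens_promo"): 0.02,
    ("male", "womens_promo"): 0.02, ("male", "mens_promo"): 0.08,
}


def simulate(n_visitors=5000, seed=42):
    """Feed simulated visitors to the bandit; return how often each arm was served."""
    env = random.Random(seed)
    bandit = SegmentThompsonSampler(["womens_promo", "mens_promo"], seed=seed + 1)
    pulls = defaultdict(int)
    for _ in range(n_visitors):
        segment = "female" if env.random() < 0.8 else "male"  # 80/20 audience split
        arm = bandit.choose(segment)
        reward = 1 if env.random() < TRUE_RATES[(segment, arm)] else 0
        bandit.update(segment, arm, reward)
        pulls[(segment, arm)] += 1
    return dict(pulls)


if __name__ == "__main__":
    print(simulate())
```

Because the posteriors are kept per segment, traffic within each segment drifts toward that segment's better variation as evidence accumulates. No manual step is needed to pick a global winner.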

While the classic approach requires manual intervention once a statistically significant winner is found, bandit algorithms learn as you test, dynamically reallocating traffic among the variations as results come in. Contextual bandit algorithms go a step further: they change which population is exposed to each variation in order to maximize performance, so there is no single winning variation, nor a single losing one. At the core of it all is the notion that different people may react differently to the same content. For example, on a fashion retail site whose customers are 80% female, a classic A/B test of promotional content would reach the misleading conclusion that promotions targeted at women are the best content for everyone, even though the remaining 20% of customers expect promotions targeted at men.
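The fashion-retail example can be checked with simple arithmetic. In this sketch the 80/20 audience split comes from the text. The per-segment conversion rates and variation names are hypothetical assumptions, chosen only to show the effect. A classic A/B test compares each variation's blended rate across all visitors. A contextual policy serves each segment its own best variation.

```python
# Audience split from the example; conversion rates are hypothetical.
SHARE = {"female": 0.8, "male": 0.2}
RATE = {
    ("female", "womens_promo"): 0.10, ("female", "mens_promo"): 0.02,
    ("male", "womens_promo"): 0.02, ("male", "mens_promo"): 0.08,
}
ARMS = ["womens_promo", "mens_promo"]


def blended_rate(arm):
    """Overall conversion rate if every visitor sees the same variation."""
    return sum(SHARE[s] * RATE[(s, arm)] for s in SHARE)


def ab_winner():
    """What a classic A/B test picks: the single best variation on average."""
    return max(ARMS, key=blended_rate)


def contextual_rate():
    """Overall conversion rate if each segment gets its own best variation."""
    return sum(SHARE[s] * max(RATE[(s, a)] for a in ARMS) for s in SHARE)


print(ab_winner(), round(blended_rate(ab_winner()), 3))  # womens_promo 0.084
print(round(contextual_rate(), 3))  # 0.096
```

Under these assumed rates, the A/B winner (`womens_promo`, 8.4% overall) beats `mens_promo` (3.2%). Serving each segment its own best variation still raises the overall rate to 9.6%, because the male segment converts at 8% instead of 2%.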

To learn more about contextual bandits, read our post: A Contextual-Bandit Approach to Website Optimization