Building a personalization framework designed to scale

A scalable personalization framework leveraged by the best in the industry to bring long-term, impactful results to the entire organization.

Here’s what you need to know:

- A practical framework for building a winning personalization program, including key steps and stakeholders involved.

- Data-driven personalization: Leverage your data to understand your customers and tailor experiences accordingly.

- Six-step framework: Analyze, Brainstorm, Define, Prioritize, Build, and Optimize.

- Continuous testing and iteration: Regularly assess and refine your personalization efforts for lasting success.

Every organization has its own workflows and ways of getting things done when it comes to managing personalization efforts. Those farther along the personalization maturity ladder may adhere to rigorous practices around implementing individualized experiences, while others experiment with less formality and rigidity.

However, there are a number of critical steps all organizations need to complete to drive long-term, scalable growth. After we talked with a wide range of clients and partners, the following framework emerged, detailing how to effectively deliver on personalization goals.

A practical personalization framework

| Phases | Steps |
|---|---|
| Analyze | Insight |
| Brainstorm | Idea |
| Define | Hypothesis & Goal |
| Plan | Prioritize, Define |
| Build | Develop, QA & Implement |
| Optimize | Validate, Optimize & Test |

Each phase covers the elements that key stakeholders must iron out to launch personalization campaigns effectively. Those individuals, whom we have already identified in this post, should be assigned according to the expertise each phase requires (e.g., any data-related tasks fall under the Optimization & Analytics role).

By pairing activities with the right people and communicating responsibilities, key areas of ownership become clear and high-value outcomes become far more attainable.

Now, let’s walk through what this might look like in practice, and identify all of the hands involved throughout the process of taking an experience live.

Personalization strategy example

In this example, the Lead is closely connected to the entire process. This individual can be directly involved at every stage, from ideation to planning and execution, or only peripherally, providing support or guidance as needed.

Additionally, during stages where more than one person is responsible for the key activities outlined, either of them can act as the orchestrator, pushing the experience along through development. So long as responsibilities are known, the handoff from one stage to the next should be seamless.

This should serve as a basic framework for any organization. Customization and refinement are encouraged (and will happen naturally with time and repetition), but because every stage has a distinct purpose, the integrity of the overall framework should not be compromised.

How to do personalization: step-by-step instructions

Once key stakeholders are aware of their roles and understand the flow, the first attempt to roll out the new process can be made.

1. Analyze your data for insights & opportunities

Role: Optimization & Analytics

While businesses may have similar objectives, e.g. increase revenue, not all use cases are created equal. Some ideas may work for one site and audience, while others do not, which is why diving into the data is necessary to make truly informed decisions about what ends up on your personalization roadmap.

A good place to start is identifying pain points in a site’s analytics. Look for pages or segments where high traffic coincides with poor performance to zero in on the areas most in need of improvement.

Search for trends like:

- High bounce/exit rates on a product detail or cart page

- Low session value on mobile devices

- Low session value for a certain age group or gender

- Low session value on weekends or evenings
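To make the "high traffic, poor performance" idea concrete, here is a minimal Python sketch. It assumes a hypothetical page-level analytics export with `sessions` and `exit_rate` fields; the field names, the numbers, and the `find_pain_points` helper are all illustrative and not taken from any particular analytics tool. It flags pages whose traffic and chosen metric are both worse than the median, and the median cut-off is itself an assumption.

```python
from statistics import median

# Hypothetical page-level analytics export; names and numbers are illustrative only.
pages = [
    {"page": "/cart", "sessions": 42_000, "exit_rate": 0.61},
    {"page": "/product/a", "sessions": 38_000, "exit_rate": 0.48},
    {"page": "/blog/tips", "sessions": 3_100, "exit_rate": 0.72},
    {"page": "/home", "sessions": 55_000, "exit_rate": 0.22},
    {"page": "/checkout", "sessions": 9_800, "exit_rate": 0.35},
]


def find_pain_points(rows, metric_key="exit_rate", higher_is_worse=True):
    """Flag rows with above-median traffic AND worse-than-median performance.

    Using the median as the cut-off is an assumption; fixed thresholds or
    percentiles would work just as well.
    """
    traffic_cut = median(r["sessions"] for r in rows)
    metric_cut = median(r[metric_key] for r in rows)

    def performs_poorly(r):
        # For exit or bounce rate, higher is worse; for session value, lower is worse.
        if higher_is_worse:
            return r[metric_key] > metric_cut
        return r[metric_key] < metric_cut

    flagged = [r for r in rows if r["sessions"] > traffic_cut and performs_poorly(r)]
    # Largest audiences first, since improving them affects the most visitors.
    return sorted(flagged, key=lambda r: r["sessions"], reverse=True)


flagged = find_pain_points(pages)
print([r["page"] for r in flagged])  # → ['/cart']
```

For a metric where lower is worse, such as session value, pass that metric's key along with `higher_is_worse=False`.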

Alternatively, companies can take an audience-first approach, identifying impactful segments to build experiences around. For example, upon breaking down the site’s traffic, your team might find a large portion of folks coming from a certain traffic source or of a certain demographic.

Try to surface these interesting variables to better conceptualize how you’d like to best serve important groups of visitors:

- Gender

- Product category

- Location

- Traffic source

- Price bands

- Price sensitivity

- Brand loyalty
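One way to surface these variables is to break sessions down by a single variable and compare each segment's share of traffic with its average session value. The sketch below assumes hypothetical session-level records with `traffic_source`, `location`, and `revenue` fields; the data and the `segment_summary` helper are illustrative only.

```python
from collections import defaultdict

# Hypothetical session-level records; field names and values are illustrative assumptions.
sessions = [
    {"traffic_source": "paid_social", "location": "US", "revenue": 0.0},
    {"traffic_source": "paid_social", "location": "UK", "revenue": 12.0},
    {"traffic_source": "email", "location": "US", "revenue": 80.0},
    {"traffic_source": "email", "location": "US", "revenue": 40.0},
    {"traffic_source": "organic", "location": "UK", "revenue": 0.0},
    {"traffic_source": "paid_social", "location": "US", "revenue": 0.0},
]


def segment_summary(rows, variable):
    """Return {segment: (share_of_sessions, avg_session_value)} for one variable."""
    totals = defaultdict(lambda: [0, 0.0])  # segment -> [session count, revenue sum]
    for r in rows:
        bucket = totals[r[variable]]
        bucket[0] += 1
        bucket[1] += r["revenue"]
    n = len(rows)
    return {seg: (count / n, revenue / count) for seg, (count, revenue) in totals.items()}


summary = segment_summary(sessions, "traffic_source")
print(summary["paid_social"])  # → (0.5, 4.0)
```

In this made-up data, paid social brings in half of all sessions but a low average session value, which is exactly the kind of large, underperforming segment worth building an experience around.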

Now, with a better understanding of the site’s performance and some interesting segments, it’s time to take the opportunities discovered and put them into practice.

2. Brainstorm ideas or concepts for testing

Role: Multidisciplinary

Anything goes!

Break down departmental silos and encourage knowledge sharing by sourcing ideas from all relevant stakeholders, and you will never run out of A/B testing ideas. Bring the insights gathered in the previous step and discuss new testing opportunities together during regularly scheduled brainstorming sessions.

Start by creating a list of all the broad categories you are able to test. Then, combine that list with all the kinds of tests you can run. This keeps teams grounded by reminding them of what’s possible: which experiences can be tested and whether the means exist to actually execute them. It may also surface experiments that would otherwise have been overlooked.

Categories might include:

- Banners

- Content

- Layout

- Offers

- Overlays

- Emails

- Push Notifications

- Merchandising Rules

- Messaging

- Recommendation Strategies
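Combining the category list with the kinds of tests you can run is essentially a cross product, filtered by what the team can actually execute. In this minimal sketch, the categories come from the list above, but the test types and the `unsupported` capability set are hypothetical placeholders to be replaced with whatever your own tooling supports.

```python
from itertools import product

# Categories from the list above.
categories = [
    "Banners", "Content", "Layout", "Offers", "Overlays", "Emails",
    "Push Notifications", "Merchandising Rules", "Messaging",
    "Recommendation Strategies",
]

# Hypothetical test types; substitute the kinds of tests your tooling supports.
test_types = ["A/B test", "Multivariate test", "Audience-targeted experience"]

# Hypothetical capability gaps: pairs the team cannot execute today.
unsupported = {
    ("Emails", "Multivariate test"),
    ("Push Notifications", "Multivariate test"),
}


def brainstorm_grid(cats, types, blocked):
    """List every category/test-type pair the team currently has the means to run."""
    return [(c, t) for c, t in product(cats, types) if (c, t) not in blocked]


grid = brainstorm_grid(categories, test_types, unsupported)
print(len(grid))  # → 28
```

Walking the resulting grid row by row during a brainstorm is one way to prompt ideas for combinations nobody had considered.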

Additionally, teams should think long and hard about the “why” behind a particular test rather than focusing on the “what.” Testing which homepage banner treatment generates the most clicks, for example, produces individual results that aren’t very meaningful on their own, and a personalization program run this way will quickly lose steam.

More on how to do that in the next step.

3. Define a hypothesis and matching KPI for each idea

Role: Marketing & Merchandising Role + Optimization & Analytics Role

This is where it gets fun – hypothesizing changes to the site that would lead to the desired user behavior. A good way to think about building such a hypothesis is:

Based on [OBSERVATION], I believe that if we [PROPOSED CHANGE], we will see an increase in [TEST PRIMARY METRIC].

These statements should not be confused with facts. They are reasoned ideas based on data analysis and observations that have already been made, and they act as a starting point for the optimization process.
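Capturing each idea as a small structured record makes it easy to keep the observation, proposed change, and primary metric together and to render the hypothesis template consistently. This is a minimal sketch; the `TestIdea` class and the example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    observation: str
    proposed_change: str
    primary_metric: str

    def hypothesis(self) -> str:
        # Mirrors the template: Based on [OBSERVATION], I believe that if we
        # [PROPOSED CHANGE], we will see an increase in [TEST PRIMARY METRIC].
        return (
            f"Based on {self.observation}, I believe that if we "
            f"{self.proposed_change}, we will see an increase in "
            f"{self.primary_metric}."
        )


# Hypothetical example values.
idea = TestIdea(
    observation="high exit rates on mobile product pages",
    proposed_change="show social proof on mobile product pages",
    primary_metric="conversion rate",
)
print(idea.hypothesis())
```

Keeping these records in one list also gives the later prioritization step a ready-made backlog to score.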

Each hypothesis should be closely tied to the “why,” or goal, behind the test. Because that goal is largely driven by the business’s objectives and its online activities, it’s important to define the desired outcome of each experience before it launches.

For example, the objective of a retail site is to sell products and, as a result, grow the business and increase revenue. With the website’s goal set as increasing online sales, KPIs can be chosen accordingly.

The proposed change might be:

- Using social proof on desktop/mobile

- Testing recommendations on PDPs for both desktop/mobile

- Personalizing homepage hero banners

- Triggering behavioral exit-intent popups

- Implementing free shipping threshold notifications

The primary metric might be:

- Conversion rate

- Average order value

- Average revenue per user

- Monthly revenue

- Repeat purchases

- Click-through rate

- Exit rate

On the other hand, if the website were a news publisher aiming to increase reader loyalty, possible KPIs might be:

- Bounce rate

- Average pages per visit

- Engagement with article pages (e.g. video play)

- Average time on site

- Percentage of returning visitors

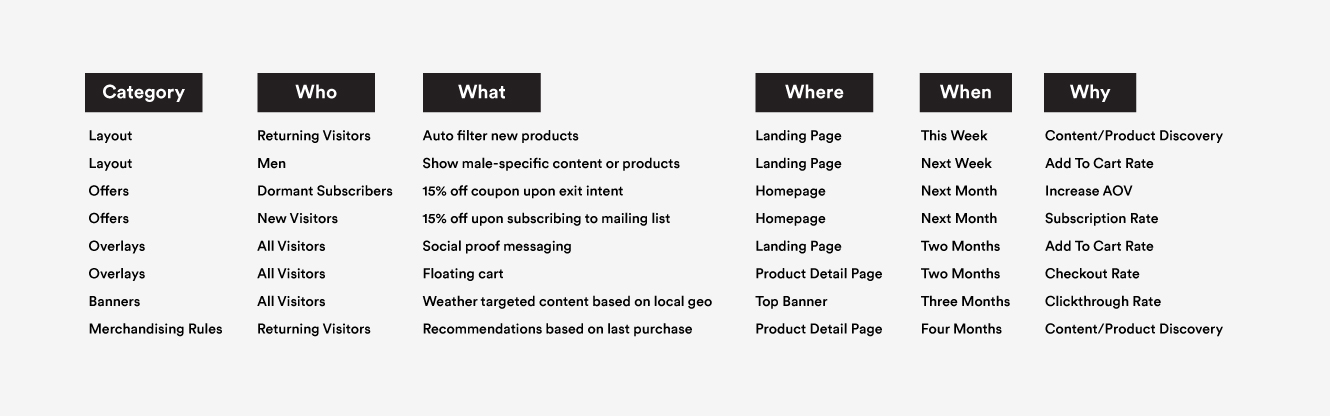

At the end of the last two steps, you should have a list that looks something like this:

With it, ideas can then be vetted for placement on the testing roadmap.

4. Prioritize a collection of potential test ideas

Role: Multidisciplinary

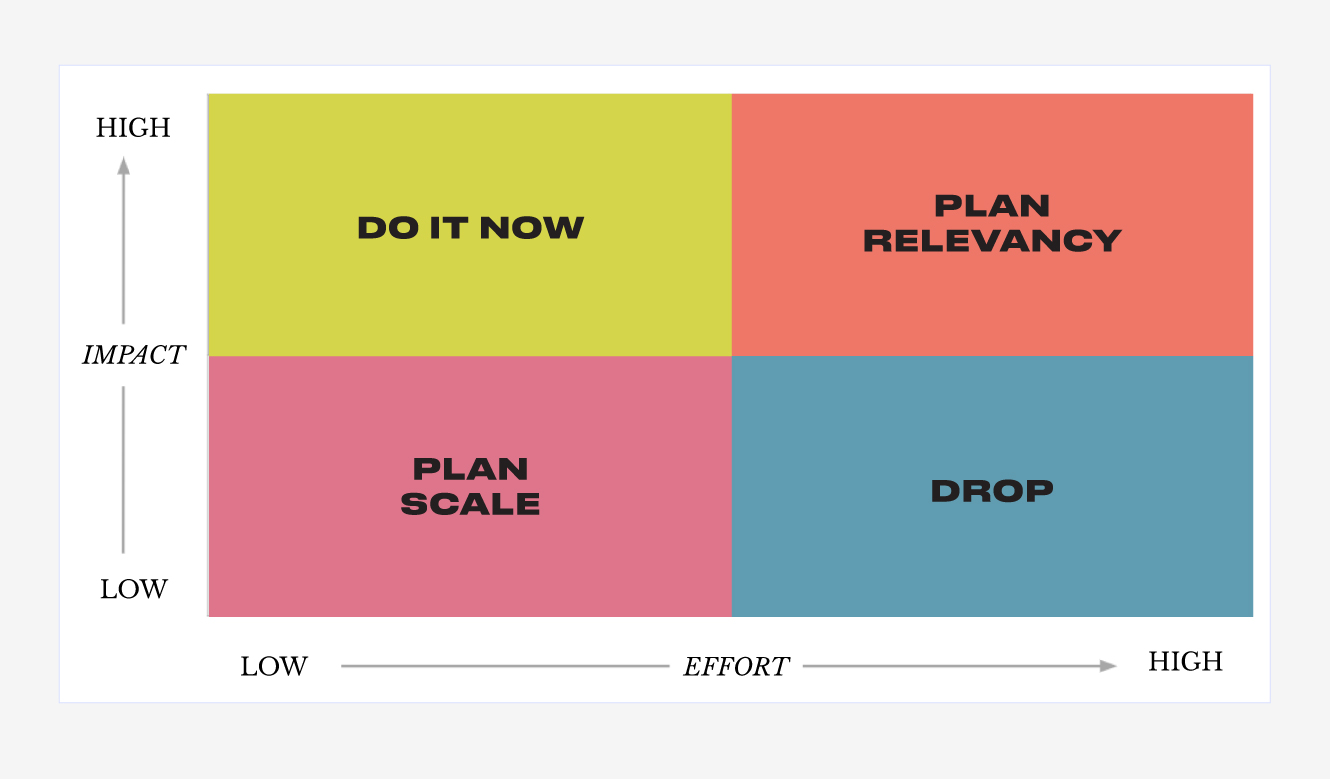

With all the excitement and speculation around the outcome of a particular experience, it’s easy to get carried away. But as with any initiative, it’s crucial to consider the estimated impact and effort of each idea before pulling the trigger. Otherwise, valuable time and energy can be wasted on campaigns that are either unrealistic or offer little to no value to the business, both of which can seriously hurt the long-term sustainability of a personalization program.

The methodology below can aid the decision-making process and help you get the most out of each campaign.

Impact

Rated holistically as Very High, High, or Medium, based on:

- Alignment with company objectives

- Estimated time to impact

- Effect on the purchase funnel

- Level of excitement generated

- Endorsement by an executive sponsor

- Ability to reduce costs

Effort

Rated holistically as High, Medium, or Low, based on:

- Teams involved

- Code or test complexity

- Test definition

- Time to QA

Once you’ve evaluated each of these factors, assess the results and plot each campaign idea on an impact vs. effort matrix.

For example, a high impact / low effort personalization campaign might be leveraging social proof, which showcases the number of views, adds-to-cart, or purchases on a specific product from other users on a site. Typically used to ease the minds of apprehensive customers and turn them into confident buyers, social proof campaigns require minimal development and are a quick win for all.

As a simple North Star:

- High impact + Low effort = Do it now

- High impact + High effort = Plan for the long-term

- Low impact + Low effort = Plan for a quick win

- Low impact + High effort = Drop
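The matrix and the North Star rules above can be turned into a simple scorecard. The article rates impact on a three-level scale and effort on another, while the matrix has only two levels per axis, so this sketch assumes Very High and High count as high impact, and only High counts as high effort. That collapse, the sort order (which follows the North Star list), and all of the example ratings are hypothetical choices for illustration.

```python
# Rating scales from the article; collapsing them to two levels is an assumption.
IMPACT_SCORE = {"Very High": 3, "High": 2, "Medium": 1}
EFFORT_SCORE = {"Low": 1, "Medium": 2, "High": 3}

# North Star rules: (high impact?, high effort?) -> action.
ACTIONS = {
    (True, False): "Do it now",
    (True, True): "Plan for the long-term",
    (False, False): "Plan for a quick win",
    (False, True): "Drop",
}
ORDER = ["Do it now", "Plan for the long-term", "Plan for a quick win", "Drop"]


def classify(impact: str, effort: str) -> str:
    high_impact = IMPACT_SCORE[impact] >= 2  # Very High or High
    high_effort = EFFORT_SCORE[effort] >= 3  # High only
    return ACTIONS[(high_impact, high_effort)]


def scorecard(ideas):
    """ideas: (name, impact, effort) tuples. Sorted by action, then impact desc, effort asc."""
    rows = [(name, impact, effort, classify(impact, effort)) for name, impact, effort in ideas]
    return sorted(rows, key=lambda r: (ORDER.index(r[3]), -IMPACT_SCORE[r[1]], EFFORT_SCORE[r[2]]))


# Hypothetical ratings, invented for illustration only.
ideas = [
    ("Personalized homepage hero banners", "Medium", "High"),
    ("Recommendations on PDPs", "High", "High"),
    ("Free shipping threshold notifications", "Medium", "Medium"),
    ("Social proof on PDPs", "Very High", "Low"),
]
for name, impact, effort, action in scorecard(ideas):
    print(f"{action:<24} {name} (impact: {impact}, effort: {effort})")
```

Teams that prefer finer distinctions could sort on a numeric impact-to-effort ratio instead of collapsing to quadrants.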

Eventually, you’ll end up with a scorecard for which campaigns to prioritize.

The time, effort, and resources required will largely depend on an organization’s personalization maturity, but whatever the case, prioritizing these tests is key to shortening design and development cycles and implementing campaigns quickly and effectively. If the scope of an experiment doesn’t line up with the testing schedule, deadlines are bound to be missed and the user information behind a campaign will go stale, leading to lower-impact results, not to mention a poorer customer experience.

Use this helpful Prioritization Template for Personalization Program Management to turn a backlog of campaign ideas into a proper action plan.

Once armed with a list of vetted ideas, the specifics of each campaign must be defined in a test brief. This document will be populated by some of the information already established in previous steps, while also outlining important considerations for implementation such as the test parameters, audience, timeline, and so on.

Key stakeholders should work together in the creation of each brief, using it as a point of reference for everything to do with execution – the agreed approach, coordination of schedules, as well as individual responsibilities through launch, validation, and optimization phases.

Then, the building officially begins.

5. Develop the intended user experience

Role: Multidisciplinary

Now, both creative and technical functions must align to create the intended end-user experience. Typically, the following workflow is adopted to first mock up, or “stage,” how the final experiment will look:

- Copy and CTAs are drafted (Marketing & Merchandising)

- Designs are mocked up (Product)

- The specs are built in HTML, CSS, or JavaScript (Development)

This alone can take weeks, sometimes months, depending on how efficient workflows are, which is why creating a culture of experimentation is so important to an organization’s ability to scale a personalization program. Templatizing campaign creation can also help shorten design and development cycles and empower marketing to accelerate experience delivery.

Once an experience is developed, it goes into review to ensure it meets all of the agreed-upon requirements. During quality assurance (QA):

- Any kinks in functionality are noted (Marketing & Merchandising)

- Updates to reflect any requested changes to the experience are made (Development)

- Approval for final implementation is received (Executive Leader)

Of course, further QA cycles may be necessary.

6. Evaluate and optimize

Once a campaign goes live, closely monitoring, analyzing, and optimizing its performance is just as important to its success as all the work that led up to launch. Firm conclusions, however, can only be drawn after the test reaches statistical significance.

How long an experiment must run to produce valid results varies with the required sample size and the number of variations served, but a minimum of two weeks is recommended. And while it may be tempting to attribute changes in KPIs to a specific experience, a proper conclusion can only be drawn once the estimated sample threshold has been reached; acting before then may lead to incorrect decisions based on incomplete data.

Consider the following variables when estimating proper sample sizes:

Baseline conversion rate – The current conversion rate (number of successful actions divided by the number of visitors, sessions, or impressions) for the experience being tested.

Expected uplift in conversion rate – The projected % change in conversion rate against the baseline rate. For example, if the baseline conversion rate is 2.5%, a 5% expected uplift would result in a new conversion rate of 2.625%.

Number of variations – The number of variations compared in a single test.

Average sample size per day – The number of visitors to be served in the experiment over the course of one day.

You can also use this Bayesian A/B Test Duration & Sample Size Calculator to help you find out how long you will need to run your experiments to get statistically significant results.

After an experiment officially concludes, valuable insights can then be gleaned to better understand the underlying reasons behind the results for each particular segment of users. These should be logged and used to continue building out various audience profiles, informing the modification and optimization of running campaigns as well as completely new tests.

And then back to step one!

Personalization efforts are cyclical, meaning as one test concludes, companies will find themselves at the beginning of the framework, where they will yet again begin to analyze collected data, brainstorm additional ideas, hypothesize new or improved changes, and so forth.

Each time a team works their way through the experience-building steps above, processes and workflows will become more efficient, creating a well-oiled machine that brings scalable and impactful personalization results to the entire company.

Naturally, over time, programs will fluctuate and adapt to the needs of the organization, but this personalization framework is meant to act as a guiding light for those looking to reassess or improve upon how they operate.