A vital tool for marketers, A/B testing allows businesses to make impactful, data-driven decisions regarding the customer experience. Whenever you set up an A/B/n test with multiple variations, it’s important to determine how you want the traffic to be distributed between the variations. The behavior of each traffic allocation option is as follows:

In a nutshell, with manual allocation, traffic is split between variations at fixed rates (an even split, for example) until a single winner is declared. This is the de facto standard A/B/n test: the assumption is that once results are significant, the test administrator will serve only the best variation to all visitors.

For example, if you launch a test with four variations, you may decide that all variations should have equal exposure, 25% of traffic each. Alternatively, you can favor certain variations over others and choose any other combination of allocation rates that adds up to 100%, such as 50/20/20/10.
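As a rough illustration of the 50/20/20/10 example, the sketch below checks that a set of allocation rates adds up to 100% and then assigns each visitor to a variation at random, weighted by those rates. The function names and the variation labels A to D are hypothetical, and this is only one simple way to implement a fixed split, not how any particular testing platform does it.

```python
import random


def validate_allocation(rates):
    """Raise ValueError unless the rates are non-negative and add up to 100."""
    if any(rate < 0 for rate in rates.values()):
        raise ValueError("allocation rates must be non-negative")
    total = sum(rates.values())
    if abs(total - 100) > 1e-9:
        raise ValueError(f"allocation rates must add up to 100, got {total}")


def assign_variation(rates, rng=random):
    """Pick one variation at random, weighted by its allocation rate."""
    validate_allocation(rates)
    names = list(rates)
    weights = [rates[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


# Hypothetical four-variation test using the 50/20/20/10 split.
allocation = {"A": 50, "B": 20, "C": 20, "D": 10}
rng = random.Random(42)  # seeded only so the demo is repeatable

counts = {name: 0 for name in allocation}
for _ in range(10_000):
    counts[assign_variation(allocation, rng)] += 1

print(counts)  # each share should land close to its allocation rate
```

In practice, a visitor is usually kept on the same variation across visits (for instance, by storing the assignment), which this sketch leaves out.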

Manual allocation tests are, by definition, tests between variations (and a control group, if relevant) in which one variation is ultimately declared the winner with a high level of confidence.

With automatic allocation (also called dynamic allocation or the multi-armed bandit method), the highest-performing variation is gradually served to a larger percentage of visitors as more data is collected. Over time, the system dynamically routes traffic to the best-performing variation based on available data. This means that even if variation A is the best performer today, a different variation may outperform it a month from now.

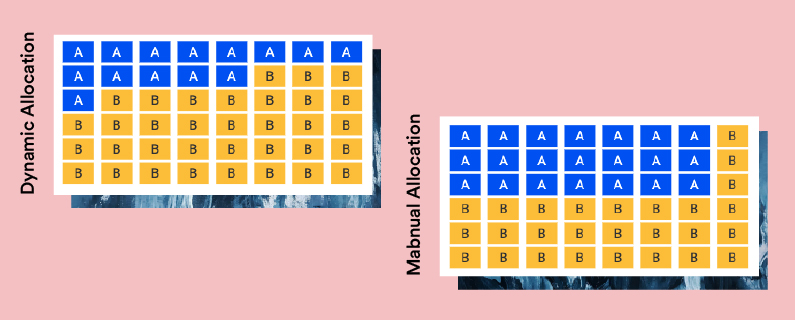

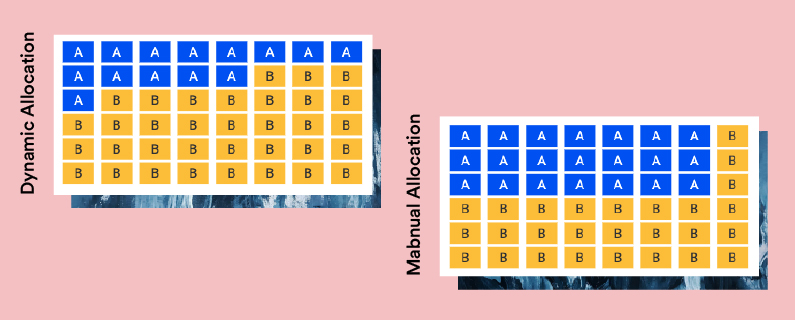

The diagrams above illustrate manual versus automated traffic allocation behavior over an ongoing experiment between two variations, where a decision is required by the eighth day.

Each of the traffic allocation methods is optimized for distinct use-cases. Ask yourself which of the following assertions better describes the use case at hand:

1) I am looking for the best variation so I can present it to all users in the long run. In this case, choose manual allocation.

Use case example: Layout and UX changes.

2) I am looking to make the most out of several variations during the limited time the test will run. In this case, choose automatic allocation.

Use case example: Promotions on the hero banner.

Manual allocation should be used when statistically significant results are required to justify a major, permanent change to the website, and time is not of the essence. Manual allocation tests can run for as long as required, collecting data until the results are conclusive and statistically significant.
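To make "statistically significant" a little more concrete, here is a minimal sketch of one common check for comparing two conversion rates: a two-sided two-proportion z-test. This is an assumption made for illustration. The article does not say which statistical method a given platform uses, and the function name and the sample numbers are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, visitors_a, conv_b, visitors_b):
    """Return (z, p_value) for the difference in conversion rate, B minus A."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("cannot test: no variance in the observed data")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: A converts 200 of 5,000 visitors, B converts 260 of 5,000.
z, p_value = two_proportion_z_test(200, 5_000, 260, 5_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("significant at the 5% level" if p_value < 0.05 else "keep collecting data")
```

The 5% threshold is a conventional choice, not a rule from this article. Checking results repeatedly while a test is running and stopping at the first significant reading inflates the false-positive rate, which is one reason to decide on the sample size or duration up front.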

The downside of such tests is that while you are waiting for significant results – which may take time – there is no exploitation of the data collected. Visitors will still be exposed to the poor-performing variations in the mix. In cases where promotion variations are updated frequently, there may not even be enough time to reach significant results, and therefore, any optimization opportunity is lost.

If you are managing campaigns in which the variations have a short shelf life, or in which they are updated frequently, then the multi-armed bandit approach is the better fit. Automatic allocation exploits readily available data at a much higher rate and is much more aggressive in its traffic allocation decisions. It also accounts for newly added variations, variations whose performance changes, different time periods, and more.

A/B testing and optimization should be viewed through the lens of time – you either have it, or you don’t. To explain a little further, when looking to test the impact of a long-term change, such as a new page layout, or for the purpose of this post, an email capture message, one wouldn’t want to prematurely make a big or strategic decision without the data to support it. Doing so could have major repercussions on KPIs and the overall customer experience.

Given that, a test would need to accumulate enough data about the variation so the team could confidently declare a winner, a process that can take, at minimum, two weeks. In this case, should a business have the luxury of waiting for statistically significant results, an A/B test is ideal.

But what if the lifespan of a variation is short and there isn’t time to wait for a winner? In a scenario where a hero banner is changing on a weekly basis, for example, during a sales event, the main objective is to increase a particular KPI by engaging users with the better-performing variation.

Sending traffic to a losing variation, therefore, actually reduces CTR, conversions, or whatever other primary metric is being used to measure the test’s success. This is exactly why automated allocation is well suited for shorter-term decisions, seeing as the variation driving the highest results is served more frequently, allowing teams to optimize conversions at a much quicker rate.

We can think of dynamic allocation in terms of Explore vs. Exploit, which weighs how much traffic is “wasted” on learning against the opportunity to capitalize on what has already been learned. Because 10% of traffic in dynamic allocation is always served to a random variation and 90% to the winner, the 10/90 rate of Explore and Exploit allows traffic to be directed to the leading variation while the algorithm continues to learn about “losing” variations, allowing them to bounce back.
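The 10/90 Explore and Exploit split described above closely resembles what is commonly called an epsilon-greedy strategy. The sketch below is a minimal illustration of that idea, not the actual algorithm behind any particular product. The names `choose_variation` and `record_result`, and the simulated conversion rates, are hypothetical.

```python
import random

EXPLORE_RATE = 0.10  # 10% of traffic goes to a random variation


def conversion_rate(stats, name):
    """Observed conversion rate for one variation (0.0 before any traffic)."""
    conversions, impressions = stats[name]
    return conversions / impressions if impressions else 0.0


def choose_variation(stats, rng=random):
    """Explore 10% of the time; otherwise exploit the current leader."""
    names = list(stats)
    if rng.random() < EXPLORE_RATE:
        return rng.choice(names)  # explore: any variation, including the leader
    return max(names, key=lambda name: conversion_rate(stats, name))  # exploit


def record_result(stats, name, converted):
    """Update the running (conversions, impressions) totals for a variation."""
    conversions, impressions = stats[name]
    stats[name] = (conversions + int(converted), impressions + 1)


# Simulated experiment with made-up "true" conversion rates.
true_rates = {"A": 0.05, "B": 0.20}
stats = {name: (0, 0) for name in true_rates}
rng = random.Random(7)  # seeded only so the demo is repeatable

for _ in range(20_000):
    shown = choose_variation(stats, rng)
    record_result(stats, shown, rng.random() < true_rates[shown])

for name, (conversions, impressions) in stats.items():
    print(name, "impressions:", impressions, "conversions:", conversions)
```

Because the explore step never switches off, a variation that starts poorly keeps receiving a trickle of traffic, which is what gives it the chance to bounce back if its performance improves.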

In the end, the two traffic allocation methods available provide businesses with the flexibility to gain the intelligence needed for sound, long-term decision-making as well as the power to optimize on the fly. Rather than being a question of one or the other, manual and dynamic allocation should both be used for testing, and hopefully this article has helped to clarify exactly when to use each.