Turning your customers’ mobile pain points into purposeful experiment design

It’s time for mobile to play a more prominent, if not a starring role, in your optimization and personalization program.

Here’s what you need to know:

- Mobile usage is skyrocketing, making it crucial for businesses to optimize the mobile experience to drive success.

- Identifying and addressing customer pain points is key to optimizing the mobile experience. To do this effectively, a “pain point-first” approach is recommended.

- This approach involves conducting in-depth customer research to understand their behaviors, motivations, and goals. This research lays the foundation for segmenting customers and identifying common pain points.

- Once pain points are identified, they should be grouped into buckets for prioritization. This helps businesses focus their efforts on addressing the most impactful issues.

- By understanding customer pain points, businesses can design targeted A/B tests and personalization strategies to address them. This can lead to measurable improvements in conversion rates and revenue.

A lot has changed in the past 10+ years in the A/B testing and personalization industry. That is especially true in mobile. Let’s focus on the tactical aspect of solving mobile customers’ pain points as the key to your experimentation program’s success.

Mobile has gone from totally overlooked to “mobile-first” everything, and then “let’s kill two birds with one stone and go responsive” (my thoughts on that in a later blog post). But mobile is a grown adult now and it’s time for it to play a more prominent, if not a starring role, in your experience optimization and personalization program.

Statista.com data reveals mobile phone usage is on the rise. In 2018, 52.2% of all worldwide online traffic was generated through mobile phones, up from 50.3% in 2017. In addition, Google consumer insights research found that 89% of people are likely to recommend a brand after a positive brand experience on mobile, with 46% of respondents saying they would not purchase from a brand again if they had an interruptive mobile experience. To summarize the data: if you aren’t including mobile in your experimentation efforts, you’re losing customers and money.

I’m guessing you’ve read this far because you want to know the secret to extracting more out of your current experimentation efforts – more data, more insights, and perhaps most importantly, more wins. Now that I’ve revealed the headline, it’s time to get into the details.

Identifying mobile pain points

Aggregate client ROI data from surefoot.me reveals that identifying customers’ mobile pain points and designing experiments to address them are critical to program success and customer happiness. This approach has led to measurable lifts in overall win rate and program success for our clients, and I’d like to share how it can do the same for you.

In order for you to understand our “pain point-first” experiment design methodology, I first need to explain that we begin every client engagement by conducting deep customer discovery research and identifying specific personas.

We go beyond the standard “male, age 35, median income”-style demographics and analyze a variety of different information including:

- User tests

- HotJar recordings

- Customer service transcripts

- Surveys

- NPS scores

- Google Analytics data

We draw on these and more to gain a better understanding of customers’ behaviors, motivations, goals, and problems. We’ve even talked to real, live people on the phone!

Once we understand our clients’ customers on a deeper level, we break them down into various segments based on things like:

- Device type

- Regional location

- Gender

- Browsing behavior

- Etc.

Turning pain into purpose

We’ll then shift our focus to identifying segment-specific pain points. Often, themes emerge as customers struggle with the same things, so we group those themes into “buckets” for easier test prioritization. For example, if we’re observing customers struggling with a checkout form validation, we might group them into a “Form UX” bucket.

When the pain point discovery process is complete, we step back and assess our “pain buckets.” The most common pain points reveal themselves and it’s usually quite obvious what we need to tackle first via experimentation. As an added bonus, this process can make test prioritization less painful (har har) by replacing emotional, opinion/HiPPO-based prioritization with data.
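To make the bucketing step concrete, here is a minimal sketch of how tagged observations could be counted per segment and ranked. The observation data, the bucket names other than “Form UX,” and the `rank_buckets` helper are all hypothetical, invented for illustration; this is not a description of our actual tooling.

```python
from collections import Counter

# Hypothetical observations: (segment, pain bucket) pairs tagged while
# reviewing recordings, transcripts, surveys, and so on.
observations = [
    ("mobile", "Form UX"),
    ("mobile", "Form UX"),
    ("mobile", "Navigation"),
    ("desktop", "Form UX"),
    ("mobile", "Cart CTAs"),
    ("mobile", "Form UX"),
    ("mobile", "Cart CTAs"),
]


def rank_buckets(observations, segment):
    """Count observations per bucket for one segment, most common first."""
    counts = Counter(bucket for seg, bucket in observations if seg == segment)
    return counts.most_common()


ranked = rank_buckets(observations, "mobile")
for bucket, count in ranked:
    print(f"{bucket}: {count}")
```

A raw count is only a starting point. In practice you would likely weigh each bucket by how much traffic or revenue the affected step carries before deciding what to test first.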

I’ve been told that pictures are worth a thousand words, so below are a few low-fidelity mockup examples that illustrate mobile pain points we’ve identified and how we solved them through experimentation:

Use case 1: product on the mobile homepage

Pain point: Through a combination of Google Analytics and HotJar data, we observed that mobile homepage visitors who didn’t use the hamburger nav to begin shopping weren’t engaging with homepage content and were more likely to exit the site.

Solution: Products were added to the mobile homepage, directly beneath the homepage hero section, to establish visual confirmation and trust that visitors were on the right site and enable them to begin shopping faster.

Results: 3% lift in visits to PDPs, 5% lift in AOV, 2.2% lift in revenue, all at 95% statistical significance.

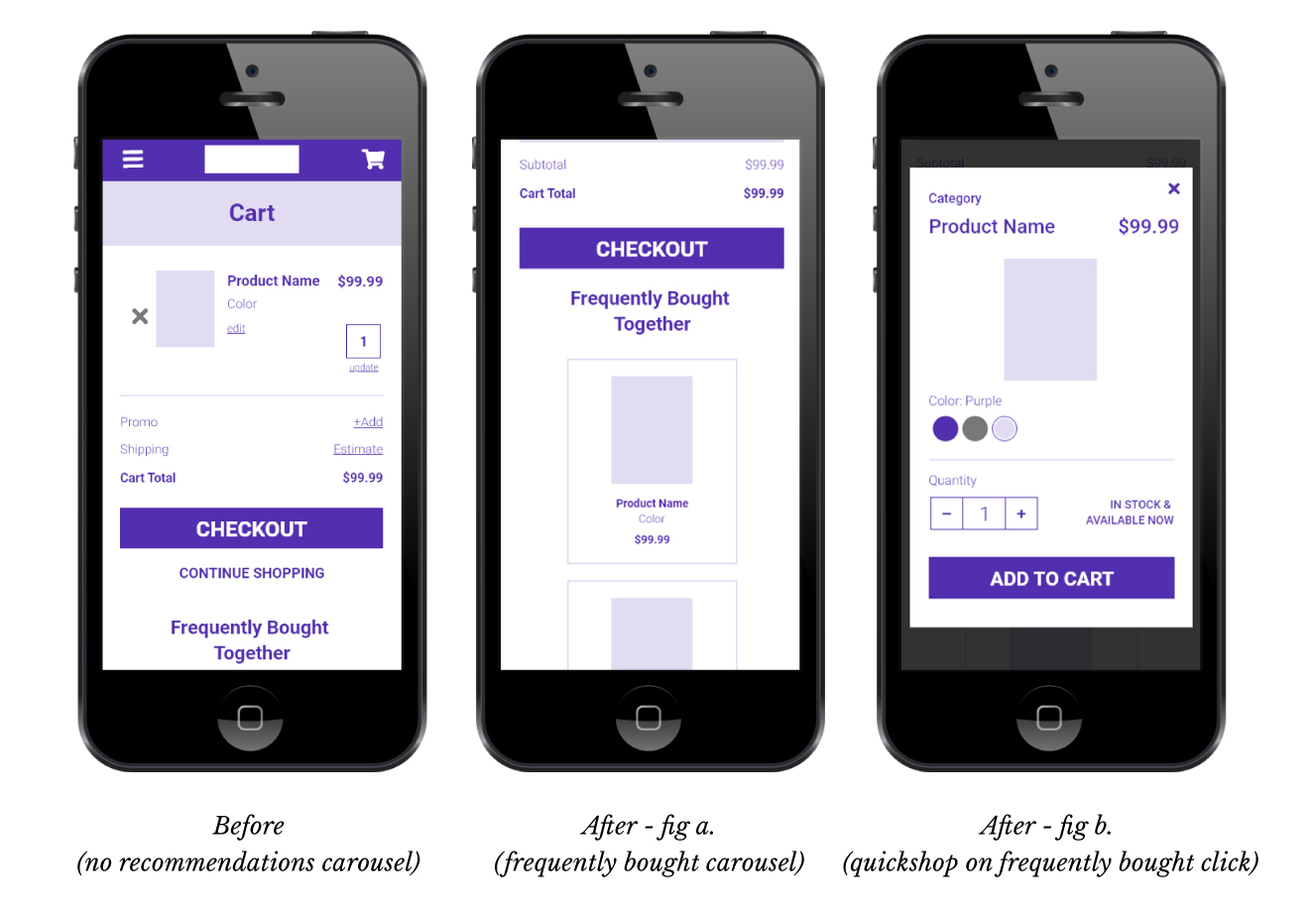

Use case 2: frequently bought carousel with quickshop

Pain point: Through a combination of HotJar recordings, client data and user interviews, we learned that mobile visitors were using the cart as a “comparison tool” to view, add and edit their product selections (aside: this is something we’ve seen across clients and is well-documented in UX research). As part of this workflow, visitors often went backwards through the conversion funnel to continue shopping, which posed risks to the end transaction and negatively impacted revenue and average order value (AOV).

Solution: Added Dynamic Yield’s “frequently bought together” recommendations widget to the cart and, as an added bonus, created “quickshop” functionality so visitors could add more products to the cart without having to go back through the conversion funnel.

Results: 2.5% lift in cart adds, 3.4% lift in AOV at 95% statistical significance.

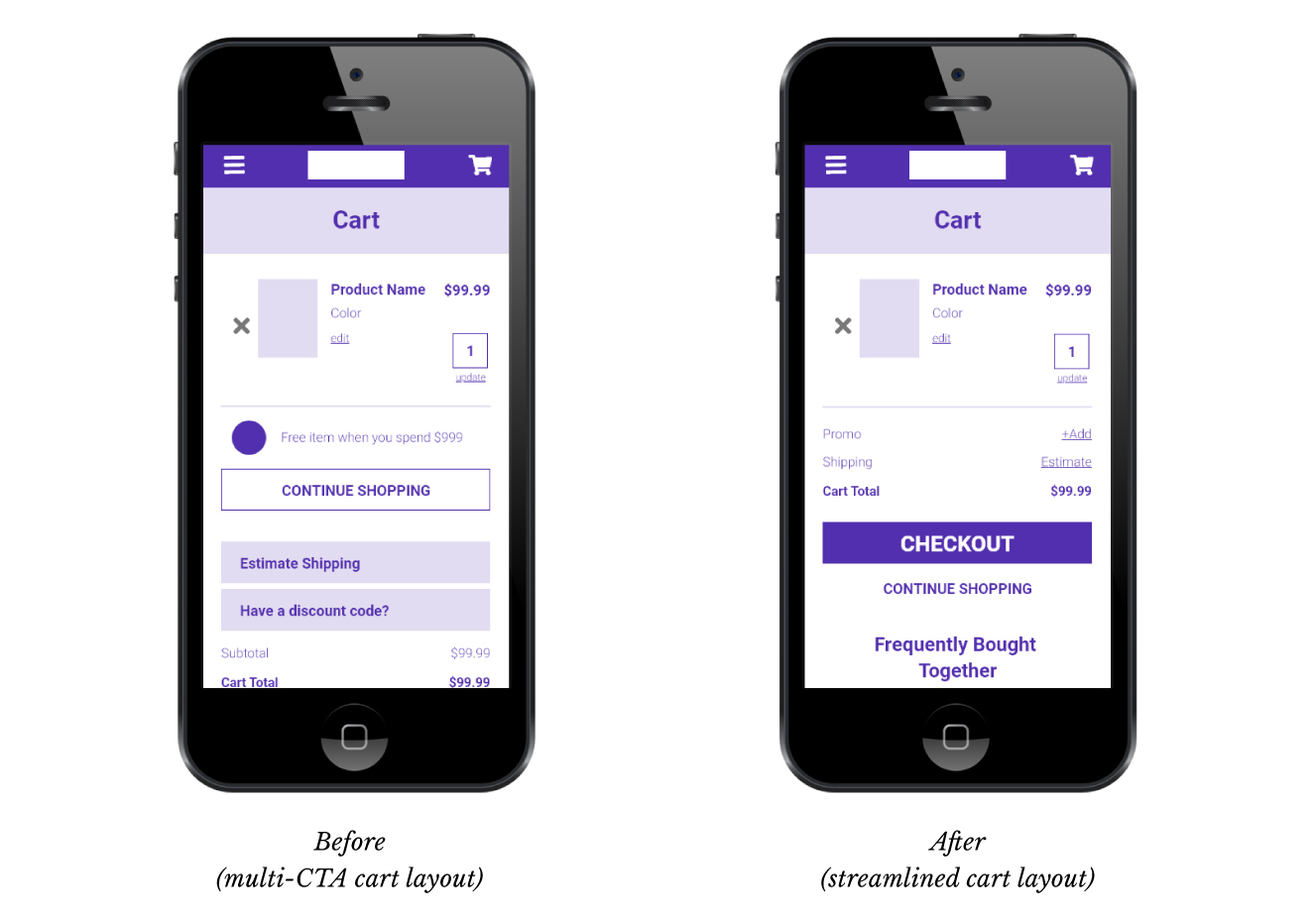

Use case 3: streamlined mobile cart

Pain point: Through a combination of HotJar recordings and user testing, we identified that many mobile cart visitors were overwhelmed by multiple calls to action (CTAs) competing for their attention. The biggest offender was the “Continue Shopping” button, which visitors overwhelmingly mistook for a “Continue to Checkout” button, as their default behavior was to tap the first large button they saw. Many were extremely confused when they were taken back to shopping and wondered if the site was broken, which revealed that they hadn’t read the CTA and had no idea what they had tapped.

Solution: Streamlined the cart design by eliminating competing CTAs and made the checkout button the boldest, primary CTA.

Results: 3.1% uplift in checkout visits, 3.5% conversion lift, 4.5% revenue lift, all at 95% statistical significance.
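If you want to sanity-check a “95% statistical significance” claim on a rate metric such as conversion rate, one common approach is a two-proportion z-test. The sketch below assumes that test and uses made-up visitor and conversion counts; it is not necessarily the method behind the results above, and metrics like AOV or revenue call for a different test.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, via the error function.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical counts for control (a) and variation (b), for illustration only.
z, p = two_proportion_z_test(conv_a=1000, n_a=50000, conv_b=1100, n_b=50000)
significant = p < 0.05  # 95% significance corresponds to p below 0.05
print(f"z = {z:.2f}, p = {p:.4f}, significant: {significant}")
```

Most testing platforms report this for you, so treat the sketch as a way to understand the number rather than a replacement for your tool’s statistics engine.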

Customer-first = mobile-first

If you want to derive maximum value from your experimentation program, I encourage you to start by reconnecting with your customers and gaining an understanding of their pain points, especially on mobile devices, where brands typically see more traffic but experience barriers to conversion.

Once you more fully understand your customers’ problems, you can begin solving them through thoughtful experiment design via personalization and experimentation solutions like Dynamic Yield that enable marketers to deliver highly targeted experiences.

So what are you waiting for? Stop reading and go talk to your customers!